This is the multi-page printable view of this section. Click here to print.

References

- 1: Compatibility

- 2: Parameters

- 3: Extension List

- 4: File Hierarchy

- 5: Comparison

- 6: Cost Analysis

- 7: Glossary

1 - Compatibility

Overview

Pigsty recommends using Linux kernel, amd64 arch, and RockyLinux 9.5, Debian 12 or Ubuntu 22.04 as base OS.

Kernel Architecture Compatibility: Linux kernel, amd64 architecture (x86_64)

EL Distribution Support: EL7, EL8, EL9; (RHEL, Rocky, CentOS, Alma, Oracle, Anolis,…)

Debian Distribution Support: Ubuntu 24.04 noble, 22.04 jammy, 20.04 focal; Debian 12 bookworm and 11 bullseye.

Pigsty does not use any virtualization or containerization technologies, running directly on the bare OS. Supported operating systems include EL 7/8/9 (RHEL, Rocky, CentOS, Alma, Oracle, Anolis,…), Ubuntu 24.04 / 20.04 / 22.04 & Debian 11/12. EL is our long-term supported OS, while support for Ubuntu/Debian systems was introduced in the recent v2.5 version. The main difference between EL and Debian distributions is the significant variation in package names, as well as the default availability of PostgreSQL extensions.

If you have advanced compatibility requirements, such as using specific operating system distributions, major versions, or minor versions, we offer advance compatibility support options.

Kernel & Arch Support

Currently, Pigsty supports the Linux kernel and the x86_64 / amd64 chip architecture.

MacOS and Windows operating systems can install Pigsty via Linux virtual machines/containers. We provide Vagrant local sandbox support, allowing you to use virtualization software like Vagrant and Virtualbox to effortlessly bring up the deployment environment required by Pigsty on other operating systems.

ARM64 Support

We have partial support forarm64 architecture with our pro service, (EL9 & Debian12 & Ubuntu 22.04)

EL Distribution Support

The EL series operating systems are Pigsty’s primary support target, including compatible distributions such as Red Hat Enterprise Linux, RockyLinux, CentOS, AlmaLinux, OracleLinux, Anolis, etc. Pigsty supports the latest three major versions: 7, 8, 9

- EL9: RHEL, RockyLinux, AlmaLinux (Rocky 9.5 recommended)

- EL8: RHEL, RockyLinux, AlmaLinux, Anolis (Rocky 8.10 recommended)

- EL7: RHEL, CentOS 7.9 (CentOS 7.9 recommended)

| Code | EL Distros | Minor | PG17 | PG16 | PG15 | PG14 | PG13 | PG12 | Limitation |

|---|---|---|---|---|---|---|---|---|---|

| EL9 | RHEL 9 / Rocky9 / Alma9 | 9.5 | Standard Feature Set | ||||||

| EL8 | RHEL 8 / Rocky8 / Alma8 / Anolis8 | 8.10 | Missing pljava extension |

||||||

| EL7 | RHEL7 / CentOS7 | 7.9 | EOLed OS, PG16/17, Rust and many other extensions unavailable |

RockyLinux 9.5 Recommended

Rocky 9.5 achieves a good balance between system reliability/stability and the novelty/comprehensiveness of software versions. It’s recommended for EL series users to default to this system version.EL7 End of Life!

Red Hat Enterprise Linux 7 is end of maintenance, Pigsty will no longer have official EL7 support in the open-source version. We have EL7 legacy support in our pro service.Debian Distribution Support

Pigsty supports Ubuntu / Debian series operating systems and their compatible distributions, currently supporting the two most recent LTS major versions, namely:

- U22: Ubuntu 22.04 LTS jammy (24.04.1)

- U22: Ubuntu 22.04 LTS jammy (22.04.3 Recommended)

- U20: Ubuntu 20.04 LTS focal (20.04.6)

- D12: Debian 12 bookworm (12.4)

- D11: Debian 11 bullseye (11.8)

| Code | Debian Distros | Minor | PG17 | PG16 | PG15 | PG14 | PG13 | PG12 | Limitations |

|---|---|---|---|---|---|---|---|---|---|

| U24 | Ubuntu 24.04 (noble) | 24.04.1 | Missing PGML, citus, topn, timescale_toolkit | ||||||

| U22 | Ubuntu 22.04 (jammy) | 22.04.5 | Standard Debian series feature set | ||||||

| U20 | Ubuntu 20.04 (focal) | 20.04.6 | EOL, Some extensions require online installation | ||||||

| D12 | Debian 12 (bookworm) | 12.4 | Missing polardb, wiltondb/babelfish, and official PGML support | ||||||

| D11 | Debian 11 (bullseye) | 11.8 | EOL |

Ubuntu 22.04 LTS Recommended

Ubuntu 22.04 comes with the most comp PostgresML has official support for the AL/ML extensionpgml for Ubuntu 22.04, so users with related needs are advised to use Ubuntu 22.04.3.

Ubuntu 20.04 nearing EOL

Ubuntu 20.04 focal is not recommended, it will end of standard support @ Apr 2025, Check Ubuntu Release Cycle for details

Besides, Ubuntu 20.04 has missing dependencies for the postgresql-16-postgis and postgresql-server-dev-16 packages, requiring online installation in a connected environment.

If your environment does not have internet access, and you need to use the PostGIS extension, please use this operating system with caution.

Vagrant Boxes

When deploying Pigsty on cloud servers, you might consider using the following operating system images in Vagrant, which are also the images used for Pigsty’s development, testing, and building.

generic/centos7: CentOS 7.9generic/rocky8: Rocky 8.10generic/rocky9: Rocky 9.4generic/debian11: Debian 11.8generic/debian12: Debian 12.4generic/ubuntu2004: Ubuntu 20.04.6generic/ubuntu2204: Ubuntu 22.04.3bento/ubuntu-24.04: Ubuntu 24.04

Terraform Images

When deploying Pigsty on cloud servers, you might consider using the following operating system base images in Terraform, using Alibaba Cloud as an example:

- CentOS 7.9 :

centos_7_9_x64_20G_alibase_20240628.vhd - Rocky 8.10 :

rockylinux_8_10_x64_20G_alibase_20240923.vhd - Rocky 9.5 :

rockylinux_9_5_x64_20G_alibase_20240925.vhd - Ubuntu 20.04 :

ubuntu_20_04_x64_20G_alibase_20240925.vhd - Ubuntu 22.04 :

ubuntu_22_04_x64_20G_alibase_20240926.vhd - Ubuntu 24.04 :

ubuntu_24_04_x64_20G_alibase_20240923.vhd - Debian 11.11 :

debian_11_11_x64_20G_alibase_20240923.vhd - Debian 12.7 :

debian_12_7_x64_20G_alibase_20240927.vhd - Anolis 8.8 :

anolisos_8_9_x64_20G_rhck_alibase_20240724.vhd

2 - Parameters

Please refer to the parameter list of each feature module:

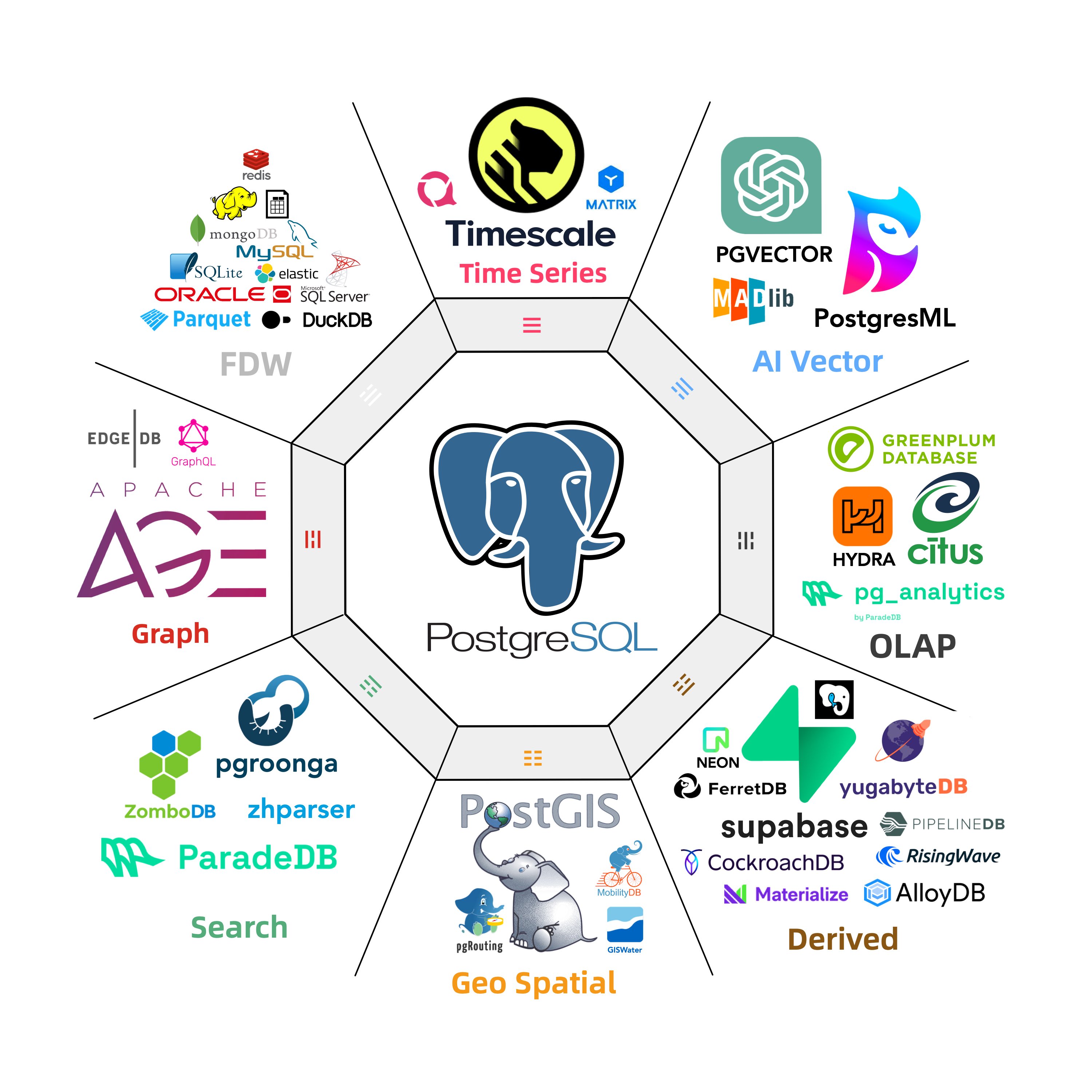

3 - Extension List

Pigsty has rich support for PostgreSQL extensions, including 400* RPM extensions and 189 DEB extensions.

4 - File Hierarchy

Pigsty FHS

#------------------------------------------------------------------------------

# pigsty

# ^-----@app # extra demo application resources

# ^-----@bin # bin scripts

# ^-----@docs # document (can be docsified)

# ^-----@files # ansible file resources

# ^-----@pigsty # pigsty config template files

# ^-----@prometheus # prometheus rules definition

# ^-----@grafana # grafana dashboards

# ^-----@postgres # /pg/bin/ scripts

# ^-----@migration # pgsql migration task definition

# ^-----@pki # self-signed CA & certs

# ^-----@roles # ansible business logic

# ^-----@templates # ansible templates

# ^-----@vagrant # vagrant local VM template

# ^-----@terraform # terraform cloud VM template

# ^-----configure # configure wizard script

# ^-----ansible.cfg # default ansible config file

# ^-----pigsty.yml # default config file

# ^-----*.yml # ansible playbooks

#------------------------------------------------------------------------------

# /etc/pigsty/

# ^-----@targets # file based service discovery targets definition

# ^-----@dashboards # static grafana dashboards

# ^-----@datasources # static grafana datasource

# ^-----@playbooks # extra ansible playbooks

# ^-----@pgadmin # pgadmin server list and password

#------------------------------------------------------------------------------

CA FHS

Pigsty’s self-signed CA is located on files/pki/ directory under pigsty home.

YOU HAVE TO SECURE THE CA KEY PROPERLY: files/pki/ca/ca.key,

which is generated by the ca role during install.yml or infra.yml.

# pigsty/files/pki

# ^-----@ca # self-signed CA key & cert

# ^[email protected] # VERY IMPORTANT: keep it secret

# ^[email protected] # VERY IMPORTANT: trusted everywhere

# ^-----@csr # signing request csr

# ^-----@misc # misc certs, issued certs

# ^-----@etcd # etcd server certs

# ^-----@minio # minio server certs

# ^-----@nginx # nginx SSL certs

# ^-----@infra # infra client certs

# ^-----@pgsql # pgsql server certs

# ^-----@mongo # mongodb/ferretdb server certs

# ^-----@mysql # mysql server certs

The managed nodes will have the following files installed:

/etc/pki/ca.crt # all nodes

/etc/pki/ca-trust/source/anchors/ca.crt # soft link and trusted anchor

All infra nodes will have the following certs:

/etc/pki/infra.crt # infra nodes cert

/etc/pki/infra.key # infra nodes key

In case of admin node failure, you have to keep files/pki and pigsty.yml safe.

You can rsync them to another admin node to make a backup admin node.

# run on meta-1, rsync to meta2

cd ~/pigsty;

rsync -avz ./ meta-2:~/pigsty

NODE FHS

Node main data dir is specified by node_data parameter, which is /data by default.

The data dir is owned by root with mode 0777. All modules’ local data will be stored under this directory by default.

/data

# ^-----@postgres # postgres main data dir

# ^-----@backups # postgres backup data dir (if no dedicated backup disk)

# ^-----@redis # redis data dir (shared by multiple redis instances)

# ^-----@minio # minio data dir (default when in single node single disk mode)

# ^-----@etcd # etcd main data dir

# ^-----@prometheus # prometheus time series data dir

# ^-----@loki # Loki data dir for logs

# ^-----@docker # Docker data dir

# ^-----@... # other modules

Prometheus FHS

The prometheus bin / rules are located on files/prometheus/ directory under pigsty home.

While the main config file is located on roles/infra/templates/prometheus/prometheus.yml.j2 and rendered to /etc/prometheus/prometheus.yml on infra nodes.

# /etc/prometheus/

# ^-----prometheus.yml # prometheus main config file

# ^-----@bin # util scripts: check,reload,status,new

# ^-----@rules # record & alerting rules definition

# ^-----agent.yml # agent rules & alert

# ^-----infra.yml # infra rules & alert

# ^-----node.yml # node rules & alert

# ^-----pgsql.yml # pgsql rules & alert

# ^-----redis.yml # redis rules & alert

# ^-----minio.yml # minio rules & alert

# ^-----etcd.yml # etcd rules & alert

# ^-----mongo.yml # mongo rules & alert

# ^-----mysql.yml # mysql rules & alert (placeholder)

# ^-----@targets # file based service discovery targets definition

# ^-----@infra # infra static targets definition

# ^-----@node # nodes static targets definition

# ^-----@etcd # etcd static targets definition

# ^-----@minio # minio static targets definition

# ^-----@ping # blackbox ping targets definition

# ^-----@pgsql # pgsql static targets definition

# ^-----@pgrds # pgsql remote rds static targets

# ^-----@redis # redis static targets definition

# ^-----@mongo # mongo static targets definition

# ^-----@mysql # mysql static targets definition

# ^-----@ping # ping static target definition

# ^-----@patroni # patroni static target defintion (when ssl enabled)

# ^-----@..... # other targets

# /etc/alertmanager.yml # alertmanager main config file

# /etc/blackbox.yml # blackbox exporter main config file

Postgres FHS

The following parameters are related to the PostgreSQL database dir:

- pg_dbsu_home: Postgres default user’s home dir, default is

/var/lib/pgsql. - pg_bin_dir: Postgres binary dir, defaults to

/usr/pgsql/bin/. - pg_data: Postgres database dir, default is

/pg/data. - pg_fs_main: Postgres main data disk mount point, default is

/data. - pg_fs_bkup: Postgres backup disk mount point, default is

/data/backups(used when using local backup repo).

#--------------------------------------------------------------#

# Create Directory

#--------------------------------------------------------------#

# assumption:

# {{ pg_fs_main }} for main data , default: `/data` [fast ssd]

# {{ pg_fs_bkup }} for backup data , default: `/data/backups` [cheap hdd]

#--------------------------------------------------------------#

# default variable:

# pg_fs_main = /data fast ssd

# pg_fs_bkup = /data/backups cheap hdd (optional)

#

# /pg -> /data/postgres/pg-test-17 (soft link)

# /pg/data -> /data/postgres/pg-test-17/data

#--------------------------------------------------------------#

- name: create postgresql directories

tags: pg_dir

become: yes

block:

- name: make main and backup data dir

file: path={{ item }} state=directory owner=root mode=0777

with_items:

- "{{ pg_fs_main }}"

- "{{ pg_fs_bkup }}"

# pg_cluster_dir: "{{ pg_fs_main }}/postgres/{{ pg_cluster }}-{{ pg_version }}"

- name: create postgres directories

file: path={{ item }} state=directory owner={{ pg_dbsu }} group=postgres mode=0700

with_items:

- "{{ pg_fs_main }}/postgres"

- "{{ pg_cluster_dir }}"

- "{{ pg_cluster_dir }}/bin"

- "{{ pg_cluster_dir }}/log"

- "{{ pg_cluster_dir }}/tmp"

- "{{ pg_cluster_dir }}/cert"

- "{{ pg_cluster_dir }}/conf"

- "{{ pg_cluster_dir }}/data"

- "{{ pg_cluster_dir }}/meta"

- "{{ pg_cluster_dir }}/stat"

- "{{ pg_cluster_dir }}/spool"

- "{{ pg_cluster_dir }}/change"

- "{{ pg_backup_dir }}/backup"

Data FHS

# real dirs

{{ pg_fs_main }} /data # top level data directory, usually a SSD mountpoint

{{ pg_dir_main }} /data/postgres # contains postgres data

{{ pg_cluster_dir }} /data/postgres/pg-test-17 # contains cluster `pg-test` data (of version 17)

/data/postgres/pg-test-17/bin # bin scripts

/data/postgres/pg-test-17/log # logs: postgres/pgbouncer/patroni/pgbackrest

/data/postgres/pg-test-17/tmp # tmp, sql files, rendered results

/data/postgres/pg-test-17/cert # postgres server certificates

/data/postgres/pg-test-17/conf # patroni config, links to related config

/data/postgres/pg-test-17/data # main data directory

/data/postgres/pg-test-17/meta # identity information

/data/postgres/pg-test-17/stat # stats information, summary, log report

/data/postgres/pg-test-17/change # changing records

/data/postgres/pg-test-17/spool # spool directory for pgbackrest archive

/data/postgres/pg-test-17/backup # soft link to backup dir

{{ pg_fs_bkup }} /data/backups # could be a cheap & large HDD mountpoint

/data/backups/postgres/pg-test-15/backup # local backup repo path

# soft links

/pg -> /data/postgres/pg-test-17 # pg root link

/pg/data -> /data/postgres/pg-test-17/data # real data dir

/pg/backup -> /var/backups/postgres/pg-test-17/backup # base backup

Binary FHS

On EL releases, the default path for PostgreSQL binaries is:

/usr/pgsql-${pg_version}/

Pigsty will create a softlink /usr/pgsql to the currently installed version specified by pg_version.

/usr/pgsql -> /usr/pgsql-15

Therefore, the default pg_bin_dir will be /usr/pgsql/bin/, and this path is added to the PATH environment via /etc/profile.d/pgsql.sh.

export PATH="/usr/pgsql/bin:/pg/bin:$PATH"

export PGHOME=/usr/pgsql

export PGDATA=/pg/data

For Ubuntu / Debian, the default path for PostgreSQL binaries is:

/usr/lib/postgresql/${pg_version}/bin

Pgbouncer FHS

Pgbouncer is run using the Postgres user, and the config file is located in /etc/pgbouncer. The config file includes.

pgbouncer.ini: pgbouncer main configdatabase.txt: pgbouncer database listuserlist.txt: pgbouncer user listuseropts.txt: pgbouncer user options (user-level parameter overrides)pgb_hba.conf: lists the access privileges of the connection pool users

Redis FHS

Pigsty provides essential support for Redis deployment and monitoring.

Redis binaries are installed in /bin/ using RPM-packages or copied binaries, including:

redis-server

redis-server

redis-cli

redis-sentinel

redis-check-rdb

redis-check-aof

redis-benchmark

/usr/libexec/redis-shutdown

For a Redis instance named redis-test-1-6379, the resources associated with it are shown below:

/usr/lib/systemd/system/redis-test-1-6379.service # Services ('/lib/systemd' in debian)

/etc/redis/redis-test-1-6379.conf # Config

/data/redis/redis-test-1-6379 # Database Catalog

/data/redis/redis-test-1-6379/redis-test-1-6379.rdb # RDB File

/data/redis/redis-test-1-6379/redis-test-1-6379.aof # AOF file

/var/log/redis/redis-test-1-6379.log # Log

/var/run/redis/redis-test-1-6379.pid # PID

For Ubuntu / Debian, the default systemd service dir is /lib/systemd/system/ instead of /usr/lib/systemd/system/.

5 - Comparison

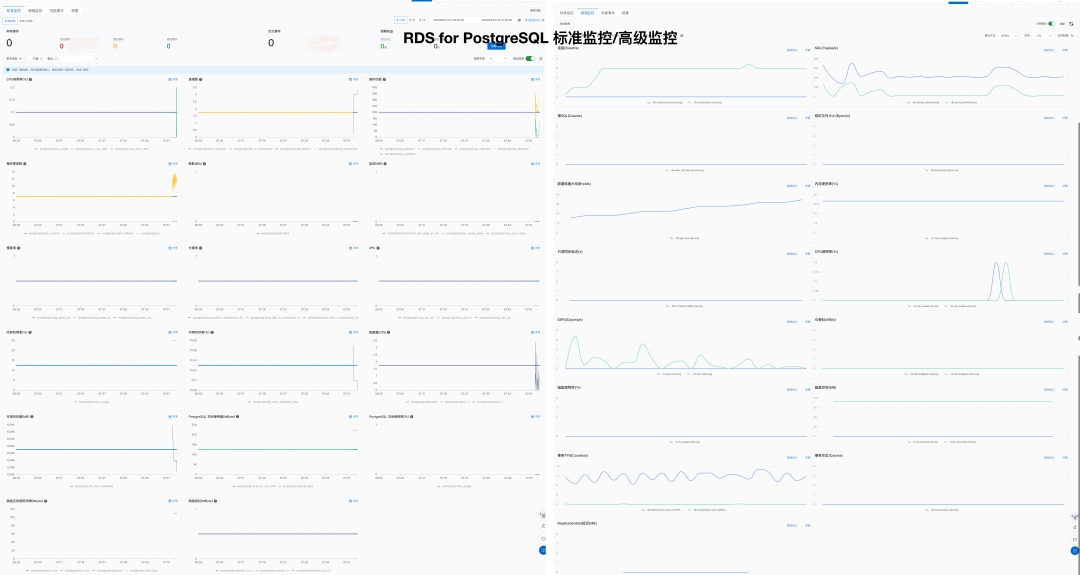

Comparing to RDS

Pigsty is an AGPLv3-licensed, local-first RDS alternative that can be deployed on your own physical machines/virtual machines, or on cloud servers.

Therefore, we chose the world’s leading Amazon Cloud AWS RDS for PostgreSQL and China’s market leader Alibaba Cloud RDS for PostgreSQL as benchmarks.

Both Alibaba Cloud RDS and AWS RDS are proprietary cloud database services, offered only on the public cloud through a leasing model. The following comparison is based on the latest PostgreSQL 16 main branch version, with the comparison cut-off date being February 2024.

Features

| Item | Pigsty | Aliyun RDS | AWS RDS |

|---|---|---|---|

| Major Version | 12 - 17 | 12 - 17 | 12 - 17 |

| Read on Standby | Of course | Not Readable | Not Readable |

| Separate R & W | By Port | Paid Proxy | Paid Proxy |

| Offline Instance | Yes | Not Available | Not Available |

| Standby Cluster | Yes | Multi-AZ | Multi-AZ |

| Delayed Instance | Yes | Not Available | Not Available |

| Load Balancer | HAProxy / LVS | Paid ELB | Paid ELB |

| Connection Pooling | Pgbouncer | Paid Proxy | Paid RDS Proxy |

| High Availability | Patroni / etcd | HA Version Only | HA Version Only |

| Point-in-Time Recovery | pgBackRest / MinIO | Yes | Yes |

| Monitoring Metrics | Prometheus / Exporter | About 9 Metrics | About 99 Metrics |

| Logging Collector | Loki / Promtail | Yes | Yes |

| Dashboards | Grafana / Echarts | Basic Support | Basic Support |

| Alerts | AlterManager | Basic Support | Basic Support |

Extensions

Here are some important extensions in the PostgreSQL ecosystem. The comparison is base on PostgreSQL 16 and complete on 2024-02-29:

| Category | Pigsty | Aliyun RDS PG | AWS RDS PG |

|---|---|---|---|

| Add Extension | Free to Install | Not Allowed | Not Allowed |

| Geo Spatial | PostGIS 3.4.2 | PostGIS 3.3.4 | PostGIS 3.4.1 |

| Time Series | TimescaleDB 2.14.2 | ||

| Distributive | Citus 12.1 | ||

| AI / ML | PostgresML 2.8.1 | ||

| Columnar | Hydra 1.1.1 | ||

| Vector | PGVector 0.6 | pase 0.0.1 | PGVector 0.6 |

| Sparse Vector | PG Sparse 0.5.6 | ||

| Full-Text Search | pg_bm25 0.5.6 |

||

| Graph | Apache AGE 1.5.0 | ||

| GraphQL | PG GraphQL 1.5.0 | ||

| Message Queue | pgq 3.5.0 | ||

| OLAP | pg_analytics 0.5.6 | ||

| DuckDB | duckdb_fdw 1.1 | ||

| CDC | wal2json 2.5.3 | wal2json 2.5 | |

| Bloat Control | pg_repack 1.5.0 | pg_repack 1.4.8 | pg_repack 1.5.0 |

| Point Cloud | PG PointCloud 1.2.5 | Ganos PointCloud 6.1 |

AWS RDS 扩展

AWS RDS for PostgreSQL 16 可用扩展(已刨除PG自带扩展)

| name | pg16 | pg15 | pg14 | pg13 | pg12 | pg11 | pg10 |

|---|---|---|---|---|---|---|---|

| amcheck | 1.3 | 1.3 | 1.3 | 1.2 | 1.2 | yes | 1 |

| auto_explain | yes | yes | yes | yes | yes | yes | yes |

| autoinc | 1 | 1 | 1 | 1 | null | null | null |

| bloom | 1 | 1 | 1 | 1 | 1 | 1 | 1 |

| bool_plperl | 1 | 1 | 1 | 1 | null | null | null |

| btree_gin | 1.3 | 1.3 | 1.3 | 1.3 | 1.3 | 1.3 | 1.2 |

| btree_gist | 1.7 | 1.7 | 1.6 | 1.5 | 1.5 | 1.5 | 1.5 |

| citext | 1.6 | 1.6 | 1.6 | 1.6 | 1.6 | 1.5 | 1.4 |

| cube | 1.5 | 1.5 | 1.5 | 1.4 | 1.4 | 1.4 | 1.2 |

| dblink | 1.2 | 1.2 | 1.2 | 1.2 | 1.2 | 1.2 | 1.2 |

| dict_int | 1 | 1 | 1 | 1 | 1 | 1 | 1 |

| dict_xsyn | 1 | 1 | 1 | 1 | 1 | 1 | 1 |

| earthdistance | 1.1 | 1.1 | 1.1 | 1.1 | 1.1 | 1.1 | 1.1 |

| fuzzystrmatch | 1.2 | 1.1 | 1.1 | 1.1 | 1.1 | 1.1 | 1.1 |

| hstore | 1.8 | 1.8 | 1.8 | 1.7 | 1.6 | 1.5 | 1.4 |

| hstore_plperl | 1 | 1 | 1 | 1 | 1 | 1 | 1 |

| insert_username | 1 | 1 | 1 | 1 | null | null | null |

| intagg | 1.1 | 1.1 | 1.1 | 1.1 | 1.1 | 1.1 | 1.1 |

| intarray | 1.5 | 1.5 | 1.5 | 1.3 | 1.2 | 1.2 | 1.2 |

| isn | 1.2 | 1.2 | 1.2 | 1.2 | 1.2 | 1.2 | 1.1 |

| jsonb_plperl | 1 | 1 | 1 | 1 | 1 | null | null |

| lo | 1.1 | 1.1 | 1.1 | 1.1 | 1.1 | 1.1 | 1.1 |

| ltree | 1.2 | 1.2 | 1.2 | 1.2 | 1.1 | 1.1 | 1.1 |

| moddatetime | 1 | 1 | 1 | 1 | null | null | null |

| old_snapshot | 1 | 1 | 1 | null | null | null | null |

| pageinspect | 1.12 | 1.11 | 1.9 | 1.8 | 1.7 | 1.7 | 1.6 |

| pg_buffercache | 1.4 | 1.3 | 1.3 | 1.3 | 1.3 | 1.3 | 1.3 |

| pg_freespacemap | 1.2 | 1.2 | 1.2 | 1.2 | 1.2 | 1.2 | 1.2 |

| pg_prewarm | 1.2 | 1.2 | 1.2 | 1.2 | 1.2 | 1.2 | 1.1 |

| pg_stat_statements | 1.1 | 1.1 | 1.9 | 1.8 | 1.7 | 1.6 | 1.6 |

| pg_trgm | 1.6 | 1.6 | 1.6 | 1.5 | 1.4 | 1.4 | 1.3 |

| pg_visibility | 1.2 | 1.2 | 1.2 | 1.2 | 1.2 | 1.2 | 1.2 |

| pg_walinspect | 1.1 | 1 | null | null | null | null | null |

| pgcrypto | 1.3 | 1.3 | 1.3 | 1.3 | 1.3 | 1.3 | 1.3 |

| pgrowlocks | 1.2 | 1.2 | 1.2 | 1.2 | 1.2 | 1.2 | 1.2 |

| pgstattuple | 1.5 | 1.5 | 1.5 | 1.5 | 1.5 | 1.5 | 1.5 |

| plperl | 1 | 1 | 1 | 1 | 1 | 1 | 1 |

| plpgsql | 1 | 1 | 1 | 1 | 1 | 1 | 1 |

| pltcl | 1 | 1 | 1 | 1 | 1 | 1 | 1 |

| postgres_fdw | 1.1 | 1.1 | 1.1 | 1 | 1 | 1 | 1 |

| refint | 1 | 1 | 1 | 1 | null | null | null |

| seg | 1.4 | 1.4 | 1.4 | 1.3 | 1.3 | 1.3 | 1.1 |

| sslinfo | 1.2 | 1.2 | 1.2 | 1.2 | 1.2 | 1.2 | 1.2 |

| tablefunc | 1 | 1 | 1 | 1 | 1 | 1 | 1 |

| tcn | 1 | 1 | 1 | 1 | 1 | 1 | 1 |

| tsm_system_rows | 1 | 1 | 1 | 1 | 1 | 1 | 1.1 |

| tsm_system_time | 1 | 1 | 1 | 1 | 1 | 1 | 1.1 |

| unaccent | 1.1 | 1.1 | 1.1 | 1.1 | 1.1 | 1.1 | 1.1 |

| uuid-ossp | 1.1 | 1.1 | 1.1 | 1.1 | 1.1 | 1.1 | 1.1 |

Aliyun Extensions

阿里云 RDS for PostgreSQL 16 可用扩展(已刨除PG自带扩展)

| name | pg16 | pg15 | pg14 | pg13 | pg12 | pg11 | pg10 |

|---|---|---|---|---|---|---|---|

| bloom | 1 | 1 | 1 | 1 | 1 | 1 | 1 |

| btree_gin | 1.3 | 1.3 | 1.3 | 1.3 | 1.3 | 1.3 | 1.2 |

| btree_gist | 1.7 | 1.7 | 1.6 | 1.5 | 1.5 | 1.5 | 1.5 |

| citext | 1.6 | 1.6 | 1.6 | 1.6 | 1.6 | 1.5 | 1.4 |

| cube | 1.5 | 1.5 | 1.5 | 1.4 | 1.4 | 1.4 | 1.2 |

| dblink | 1.2 | 1.2 | 1.2 | 1.2 | 1.2 | 1.2 | 1.2 |

| dict_int | 1 | 1 | 1 | 1 | 1 | 1 | 1 |

| earthdistance | 1.1 | 1.1 | 1.1 | 1.1 | 1.1 | 1.1 | 1.1 |

| fuzzystrmatch | 1.2 | 1.1 | 1.1 | 1.1 | 1.1 | 1.1 | 1.1 |

| hstore | 1.8 | 1.8 | 1.8 | 1.7 | 1.6 | 1.5 | 1.4 |

| intagg | 1.1 | 1.1 | 1.1 | 1.1 | 1.1 | 1.1 | 1.1 |

| intarray | 1.5 | 1.5 | 1.5 | 1.3 | 1.2 | 1.2 | 1.2 |

| isn | 1.2 | 1.2 | 1.2 | 1.2 | 1.2 | 1.2 | 1.1 |

| ltree | 1.2 | 1.2 | 1.2 | 1.2 | 1.1 | 1.1 | 1.1 |

| pg_buffercache | 1.4 | 1.3 | 1.3 | 1.3 | 1.3 | 1.3 | 1.3 |

| pg_freespacemap | 1.2 | 1.2 | 1.2 | 1.2 | 1.2 | 1.2 | 1.2 |

| pg_prewarm | 1.2 | 1.2 | 1.2 | 1.2 | 1.2 | 1.2 | 1.1 |

| pg_stat_statements | 1.1 | 1.1 | 1.9 | 1.8 | 1.7 | 1.6 | 1.6 |

| pg_trgm | 1.6 | 1.6 | 1.6 | 1.5 | 1.4 | 1.4 | 1.3 |

| pgcrypto | 1.3 | 1.3 | 1.3 | 1.3 | 1.3 | 1.3 | 1.3 |

| pgrowlocks | 1.2 | 1.2 | 1.2 | 1.2 | 1.2 | 1.2 | 1.2 |

| pgstattuple | 1.5 | 1.5 | 1.5 | 1.5 | 1.5 | 1.5 | 1.5 |

| plperl | 1 | 1 | 1 | 1 | 1 | 1 | 1 |

| plpgsql | 1 | 1 | 1 | 1 | 1 | 1 | 1 |

| pltcl | 1 | 1 | 1 | 1 | 1 | 1 | 1 |

| postgres_fdw | 1.1 | 1.1 | 1.1 | 1 | 1 | 1 | 1 |

| sslinfo | 1.2 | 1.2 | 1.2 | 1.2 | 1.2 | 1.2 | 1.2 |

| tablefunc | 1 | 1 | 1 | 1 | 1 | 1 | 1 |

| tsm_system_rows | 1 | 1 | 1 | 1 | 1 | 1 | 1 |

| tsm_system_time | 1 | 1 | 1 | 1 | 1 | 1 | 1 |

| unaccent | 1.1 | 1.1 | 1.1 | 1.1 | 1.1 | 1.1 | 1.1 |

| uuid-ossp | 1.1 | 1.1 | 1.1 | 1.1 | 1.1 | 1.1 | 1.1 |

| xml2 | 1.1 | 1.1 | 1.1 | 1.1 | 1.1 | 1.1 | 1.1 |

Performance

| Metric | Pigsty | Aliyun RDS | AWS RDS |

|---|---|---|---|

| Best Performance | PGTPC on NVME SSD evaluation sysbench oltp_rw | RDS PG Performance Whitepaper sysbench oltp scenario per-core QPS 4000 ~ 8000 | |

| Storage Specs: Maximum Capacity | 32TB / NVME SSD | 32 TB / ESSD PL3 | 64 TB / io2 EBS Block Express |

| Storage Specs: Maximum IOPS | 4K random read: up to 3M, random write 2000~350K | 4K random read: up to 1M | 16K random IOPS: 256K |

| Storage Specs: Maximum Latency | 4K random read: 75µs, random write 15µs | 4K random read: 200µs | 500µs / inferred for 16K random IO |

| Storage Specs: Maximum Reliability | UBER < 1e-18, equivalent to 18 nines MTBF: 2 million hours 5DWPD, for three years | Reliability 9 nines, equivalent to UBER 1e-9 Storage and Data Reliability | Durability: 99.999%, five nines (0.001% annual failure rate) io2 details |

| Storage Specs: Maximum Cost | 31.5 ¥/TB·month ( 5-year warranty amortized / 3.2T / enterprise-grade / MLC ) | 3200¥/TB·month (List price 6400¥, monthly package 4000¥) 3-year prepay total 50% off for this price | 1900 ¥/TB·month for using the maximum specs 65536GB / 256K IOPS maximum discount |

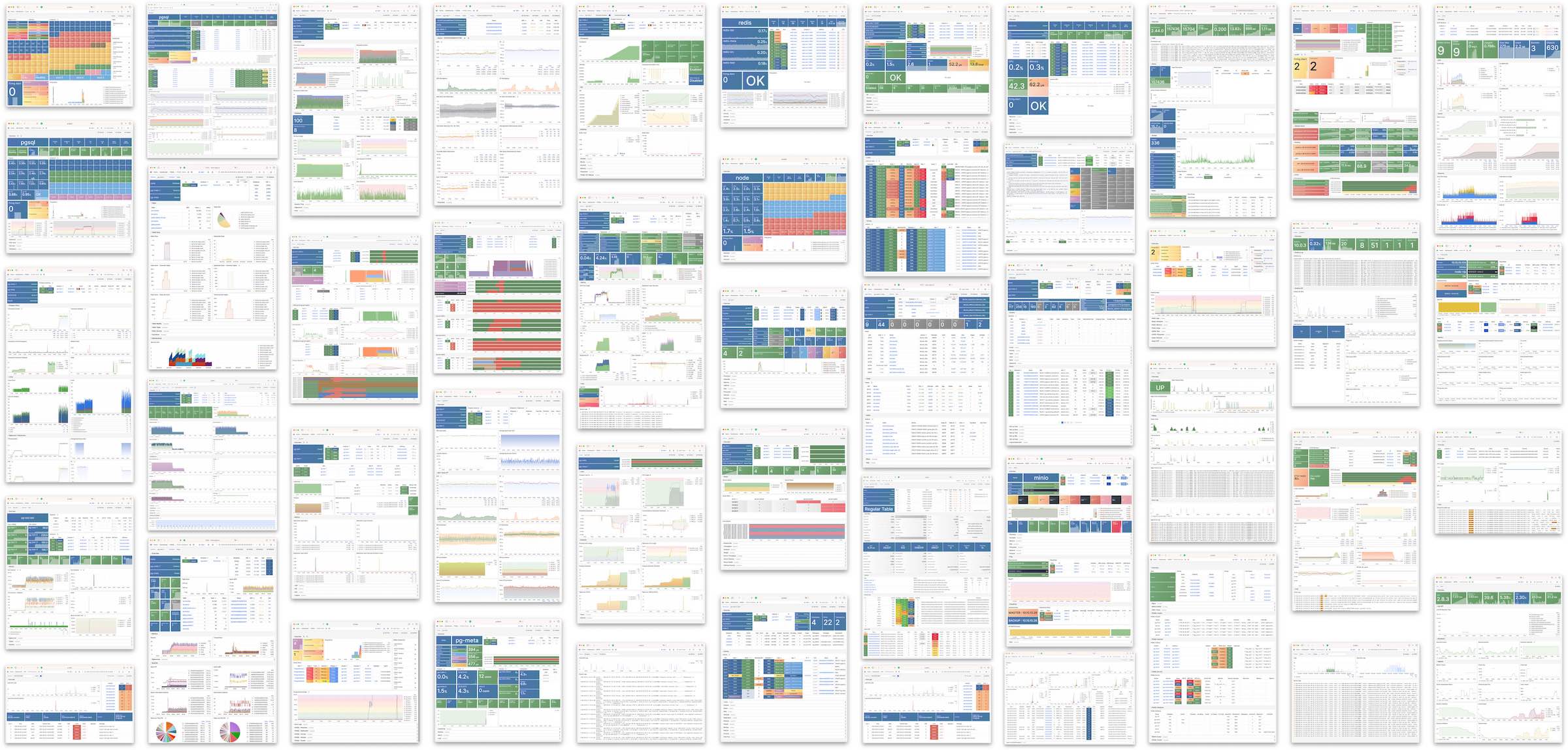

Observability

Pigsty offers nearly 3000 monitoring metrics, providing over 50 monitoring dashboards, covering database monitoring, host monitoring, connection pool monitoring, load balancing monitoring, etc., offering users an unparalleled observability experience.

Pigsty offers 638 PostgreSQL-related monitoring metrics, while AWS RDS only has 99, and Aliyun RDS has merely single-digit metrics:

Additionally, there are some projects that offer the capability to monitor PostgreSQL, but they are relatively basic and simplistic:

- pgwatch: 123 Metrics

- pgmonitor : 156 Metrics

- datadog : 69 Metrics

- pgDash

- ClusterControl

- pganalyze

- Aliyun RDS : 8 Metrics

- AWS RDS : 99 Metrics

- Azure RDS

Maintainability

| ** Metric** | Pigsty | Aliyun RDS | AWS RDS |

|---|---|---|---|

| System Usability | Simple | Simple | Simple |

| Configuration Management | Configuration file / CMDB based on Ansible Inventory | Can use Terraform | Can use Terraform |

| Change Method | Idempotent playbooks based on Ansible Playbook | Operations via console | Operations via console |

| Parameter Tuning | Automatically adapts based on node with four preset templates: OLTP, OLAP, TINY, CRIT | ||

| Infra as Code | Native support | Can use Terraform | Can use Terraform |

| Customizable Parameters | Pigsty Parameters 283 items | ||

| Service and Support | Commercial subscription support available | After-sales ticket support provided | After-sales ticket support provided |

| No Internet Deployment | Possible offline installation and deployment | N/A | N/A |

| Database Migration | playbooks for zero-downtime migration from existing Postgres into Pigsty | Provides cloud migration assistance Aliyun RDS Data Synchronization |

Cost

Experience shows that the per-unit cost of hardware and software resources for RDS is 5 to 15 times that of self-built solutions, with the rent-to-own ratio typically being one month. For more details, please refer to Cost Analysis.

| Factor | Metric | Pigsty | Aliyun RDS | AWS RDS |

|---|---|---|---|---|

| Cost | Software License/Service Fees | Free, hardware about 20 - 40 ¥/core·month | 200 ~ 400 ¥/core·month | 400 ~ 1300 ¥/core·month |

| Service Support Fees | Service about 100 ¥/ core·month | Included in RDS costs |

Other Vendors

Kubernetes Operators

Pigsty refuse to run database inside kubernetes, but if you wish to do so, there are other options:

- PGO

- StackGres

- CloudNativePG

- TemboOperator

- PostgresOperator

- PerconaOperator

- Kubegres

- KubeDB

- KubeBlocks

Reference:

6 - Cost Analysis

Cost Reference

| EC2 | vCPU-Month | RDS | vCPU-Month |

|---|---|---|---|

| DHH’s self-hosted core-month price (192C 384G) | 25.32 | Junior open-source DBA reference salary | 15K/person-month |

| IDC self-hosted data center (exclusive physical machine: 64C384G) | 19.53 | Intermediate open-source DBA reference salary | 30K/person-month |

| IDC self-hosted data center (container, oversold 500%) | 7 | Senior open-source DBA reference salary | 60K/person-month |

| UCloud Elastic Virtual Machine (8C16G, oversold) | 25 | ORACLE database license | 10000 |

| Alibaba Cloud Elastic Server 2x memory (exclusive without overselling) | 107 | Alibaba Cloud RDS PG 2x memory (exclusive) | 260 |

| Alibaba Cloud Elastic Server 4x memory (exclusive without overselling) | 138 | Alibaba Cloud RDS PG 4x memory (exclusive) | 320 |

| Alibaba Cloud Elastic Server 8x memory (exclusive without overselling) | 180 | Alibaba Cloud RDS PG 8x memory (exclusive) | 410 |

| AWS C5D.METAL 96C 200G (monthly without upfront) | 100 | AWS RDS PostgreSQL db.T2 (2x) | 440 |

For instance, using RDS for PostgreSQL on AWS, the price for a 64C / 256GB db.m5.16xlarge RDS for one month is $25,817, which is equivalent to about 180,000 yuan per month. The monthly rent is enough for you to buy two servers with even better performance and set them up on your own. The rent-to-buy ratio doesn’t even last a month; renting for just over ten days is enough to buy the whole server for yourself.

| Payment Model | Price | Cost Per Year (¥10k) |

|---|---|---|

| Self-hosted IDC (Single Physical Server) | ¥75k / 5 years | 1.5 |

| Self-hosted IDC (2-3 Server HA Cluster) | ¥150k / 5 years | 3.0 ~ 4.5 |

| Alibaba Cloud RDS (On-demand) | ¥87.36/hour | 76.5 |

| Alibaba Cloud RDS (Monthly) | ¥42k / month | 50 |

| Alibaba Cloud RDS (Yearly, 15% off) | ¥425,095 / year | 42.5 |

| Alibaba Cloud RDS (3-year, 50% off) | ¥750,168 / 3 years | 25 |

| AWS (On-demand) | $25,817 / month | 217 |

| AWS (1-year, no upfront) | $22,827 / month | 191.7 |

| AWS (3-year, full upfront) | $120k + $17.5k/month | 175 |

| AWS China/Ningxia (On-demand) | ¥197,489 / month | 237 |

| AWS China/Ningxia (1-year, no upfront) | ¥143,176 / month | 171 |

| AWS China/Ningxia (3-year, full upfront) | ¥647k + ¥116k/month | 160.6 |

Comparing the costs of self-hosting versus using a cloud database:

| Method | Cost Per Year (¥10k) |

|---|---|

| Self-hosted Servers 64C / 384G / 3.2TB NVME SSD 660K IOPS (2-3 servers) | 3.0 ~ 4.5 |

| Alibaba Cloud RDS PG High-Availability pg.x4m.8xlarge.2c, 64C / 256GB / 3.2TB ESSD PL3 | 25 ~ 50 |

| AWS RDS PG High-Availability db.m5.16xlarge, 64C / 256GB / 3.2TB io1 x 80k IOPS | 160 ~ 217 |