This is the multi-page printable view of this section. Click here to print.

PostgreSQL

- From AGPL to Apache: Why I Changed Pigsty's License

- Git for Data: Instant PostgreSQL Database Cloning

- Why PostgreSQL Will Dominate the AI Era

- Forging a China-Rooted, Global PostgreSQL Distro

- PG Extension Cloud: Unlocking PostgreSQL’s Entire Ecosystem

- The PostgreSQL 'Supply Cut' and Trust Issues in Software Supply Chain

- PostgreSQL Dominates Database World, but Who Will Devour PG?

- PostgreSQL Has Dominated the Database World

- PGDG Cuts Off Mirror Sync Channel

- Postgres Extension Day - See You There!

- OrioleDB is Coming! 4x Performance, Eliminates Pain Points, Storage-Compute Separation

- OpenHalo: MySQL Wire-Compatible PostgreSQL is Here!

- PGFS: Using Database as a Filesystem

- PostgreSQL Ecosystem Frontier Developments

- Pig, The Postgres Extension Wizard

- Don't Upgrade! Released and Immediately Pulled - Even PostgreSQL Isn't Immune to Epic Fails

- PostgreSQL 12 End-of-Life, PG 17 Takes the Throne

- The ideal way to deliver PostgreSQL Extensions

- PostgreSQL Convention 2024

- PostgreSQL 17 Released: No More Pretending!

- Can PostgreSQL Replace Microsoft SQL Server?

- Whoever Integrates DuckDB Best Wins the OLAP World

- StackOverflow 2024 Survey: PostgreSQL Has Gone Completely Berserk

- Self-Hosting Dify with PG, PGVector, and Pigsty

- PGCon.Dev 2024, The conf that shutdown PG for a week

- PostgreSQL 17 Beta1 Released!

- Why PostgreSQL is the Future Standard?

- Will PostgreSQL Change Its License?

- Postgres is eating the database world

- Technical Minimalism: Just Use PostgreSQL for Everything

- New PostgreSQL Ecosystem Player: ParadeDB

- PostgreSQL's Impressive Scalability

- PostgreSQL Outlook for 2024

- PostgreSQL Wins 2024 Database of the Year Award! (Fifth Time)

- PostgreSQL Macro Query Optimization with pg_stat_statements

- FerretDB: PostgreSQL Disguised as MongoDB

- How to Use pg_filedump for Data Recovery?

- Vector is the New JSON

- PostgreSQL, The most successful database

- AI Large Models and Vector Database PGVector

- How Powerful is PostgreSQL Really?

- Why PostgreSQL is the Most Successful Database?

- Ready-to-Use PostgreSQL Distribution: Pigsty

- Why Does PostgreSQL Have a Bright Future?

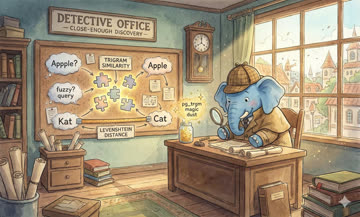

- Implementing Advanced Fuzzy Search

- Localization and Collation Rules in PostgreSQL

- PG Replica Identity Explained

- PostgreSQL Logical Replication Deep Dive

- A Methodology for Diagnosing PostgreSQL Slow Queries

- Incident-Report: Patroni Failure Due to Time Travel

- Online Primary Key Column Type Change

- Golden Monitoring Metrics: Errors, Latency, Throughput, Saturation

- Database Cluster Management Concepts and Entity Naming Conventions

- PostgreSQL's KPI

- Online PostgreSQL Column Type Migration

- Frontend-Backend Communication Wire Protocol

- Transaction Isolation Level Considerations

- Incident: PostgreSQL Extension Installation Causes Connection Failure

- CDC Change Data Capture Mechanisms

- Locks in PostgreSQL

- O(n2) Complexity of GIN Search

- PostgreSQL Common Replication Topology Plans

- Warm Standby: Using pg_receivewal

- Incident-Report: Connection-Pool Contamination Caused by pg_dump

- PostgreSQL Data Page Corruption Repair

- Relation Bloat Monitoring and Management

- Getting Started with PipelineDB

- TimescaleDB Quick Start

- Incident-Report: Integer Overflow from Rapid Sequence Number Consumption

- Incident-Report: PostgreSQL Transaction ID Wraparound

- GeoIP Geographic Reverse Lookup Optimization

- PostgreSQL Trigger Usage Considerations

- PostgreSQL Development Convention (2018 Edition)

- What Are PostgreSQL's Advantages?

- Efficient Administrative Region Lookup with PostGIS

- KNN Ultimate Optimization: From RDS to PostGIS

- Monitoring Table Size in PostgreSQL

- PgAdmin Installation and Configuration

- Incident-Report: Uneven Load Avalanche

- Bash and psql Tips

- Distinct On: Remove Duplicate Data

- Function Volatility Classification Levels

- Implementing Mutual Exclusion Constraints with Exclude

- PostgreSQL Routine Maintenance

- Backup and Recovery Methods Overview

- PgBackRest2 Documentation

- Pgbouncer Quick Start

- PostgreSQL Server Log Regular Configuration

- Testing Disk Performance with FIO

- Using sysbench to Test PostgreSQL Performance

- Changing Engines Mid-Flight — PostgreSQL Zero-Downtime Data Migration

- Finding Unused Indexes

- Batch Configure SSH Passwordless Login

- Wireshark Packet Capture Protocol Analysis

- The Versatile file_fdw — Reading System Information from Your Database

- Common Linux Statistics CLI Tools

- Installing PostGIS from Source

- Go Database Tutorial: database/sql

- Implementing Cache Synchronization with Go and PostgreSQL

- Auditing Data Changes with Triggers

- Building an ItemCF Recommender in Pure SQL

- UUID Properties, Principles and Applications

- PostgreSQL MongoFDW Installation and Deployment

From AGPL to Apache: Why I Changed Pigsty's License

Pigsty switched from AGPLv3 to Apache 2.0. Aren’t you worried about freeloaders? Freeloaders welcome — if you want to become the Debian of databases, a permissive license is table stakes. Read more

Git for Data: Instant PostgreSQL Database Cloning

How to instantly clone a massive PostgreSQL database without consuming extra storage? PostgreSQL 18 and XFS can spark some serious magic. Read more

Why PostgreSQL Will Dominate the AI Era

Context window economics, the polyglot persistence problem, and the triumph of zero-glue architecture make PostgreSQL the database king of the AI era. Read more

Forging a China-Rooted, Global PostgreSQL Distro

PostgreSQL already won. The real battle is the distro layer. Will Chinese developers watch from the sideline or craft a PG “Ubuntu” for the world? Read more

PG Extension Cloud: Unlocking PostgreSQL’s Entire Ecosystem

Free, open, no VPN. Install PostgreSQL and 431 extensions on 14 Linux distros × 6 PG versions via native RPM/DEB—and a tiny CLI. Read more

The PostgreSQL 'Supply Cut' and Trust Issues in Software Supply Chain

PostgreSQL official repos cut off global mirror sync channels, open-source binaries supply disrupted, revealing the true colors of various database and cloud vendors. Read more

PostgreSQL Dominates Database World, but Who Will Devour PG?

The same forces that once led MongoDB and MySQL toward closure are now at work in the PostgreSQL ecosystem. The PG world needs a distribution that represents “software freedom” values. Read more

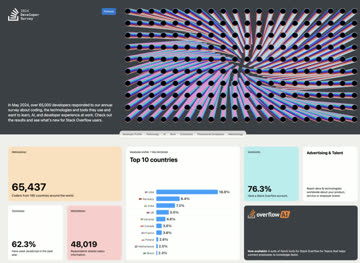

PostgreSQL Has Dominated the Database World

The 2025 SO global developer survey results are fresh out, and PostgreSQL has become the most popular, most loved, and most wanted database for the third consecutive year. Nothing can stop PostgreSQL from consolidating the entire database world! Read more

PGDG Cuts Off Mirror Sync Channel

PGDG cuts off FTP rsync sync channels, global mirror sites universally disconnected - this time they really strangled global users’ supply chain. Read more

Postgres Extension Day - See You There!

The annual PostgreSQL developer conference will be held in Montreal in May. Like the first PG Con.Dev, there’s also an additional dedicated event - Postgres Extensions Day Read more

OrioleDB is Coming! 4x Performance, Eliminates Pain Points, Storage-Compute Separation

A PG kernel fork acquired by Supabase, claiming to solve PG’s XID wraparound problem, eliminate table bloat issues, improve performance by 4x, and support cloud-native storage. Now part of the Pigsty family. Read more

OpenHalo: MySQL Wire-Compatible PostgreSQL is Here!

What? PostgreSQL can now be accessed using MySQL clients? That’s right, openHalo, which was open-sourced on April Fool’s Day, provides exactly this capability and has now joined the Pigsty kernel family. Read more

PGFS: Using Database as a Filesystem

Leverage JuiceFS to turn PostgreSQL into a filesystem with PITR capabilities! Read more

PostgreSQL Ecosystem Frontier Developments

Sharing some interesting recent developments in the PG ecosystem. Read more

Pig, The Postgres Extension Wizard

Why would we need yet another package manager for PostgreSQL & extensions? Read more

Don't Upgrade! Released and Immediately Pulled - Even PostgreSQL Isn't Immune to Epic Fails

Never deploy on Friday, or you’ll be working all weekend! PostgreSQL minor releases were pulled on the day of release, requiring emergency rollback. Read more

PostgreSQL 12 End-of-Life, PG 17 Takes the Throne

PG17 achieved extension ecosystem adaptation in half the time of PG16, with 300 available extensions ready for production use. PG 12 officially exits support lifecycle. Read more

The ideal way to deliver PostgreSQL Extensions

PostgreSQL Is Eating the Database World through the power of extensibility. With 390 extensions powering PG, we may not say it’s invincible, but it’s definitely getting much closer. Read more

PostgreSQL Convention 2024

No rules, no standards. Some developer conventions for PostgreSQL 16. Read more

PostgreSQL 17 Released: No More Pretending!

PostgreSQL is now the world’s most advanced open-source database and has become the preferred open-source database for organizations of all sizes, matching or exceeding top commercial databases. Read more

Can PostgreSQL Replace Microsoft SQL Server?

PostgreSQL can directly replace Oracle, SQL Server, and MongoDB at the kernel level. Of course, the most thorough replacement is SQL Server - AWS’s Babelfish provides wire-protocol-level compatibility. Read more

Whoever Integrates DuckDB Best Wins the OLAP World

Just like the vector database extension race two years ago, the current PostgreSQL ecosystem extension competition has begun revolving around DuckDB. MotherDuck’s official entry into the PostgreSQL extension space undoubtedly signals that competition has entered white-hot territory. Read more

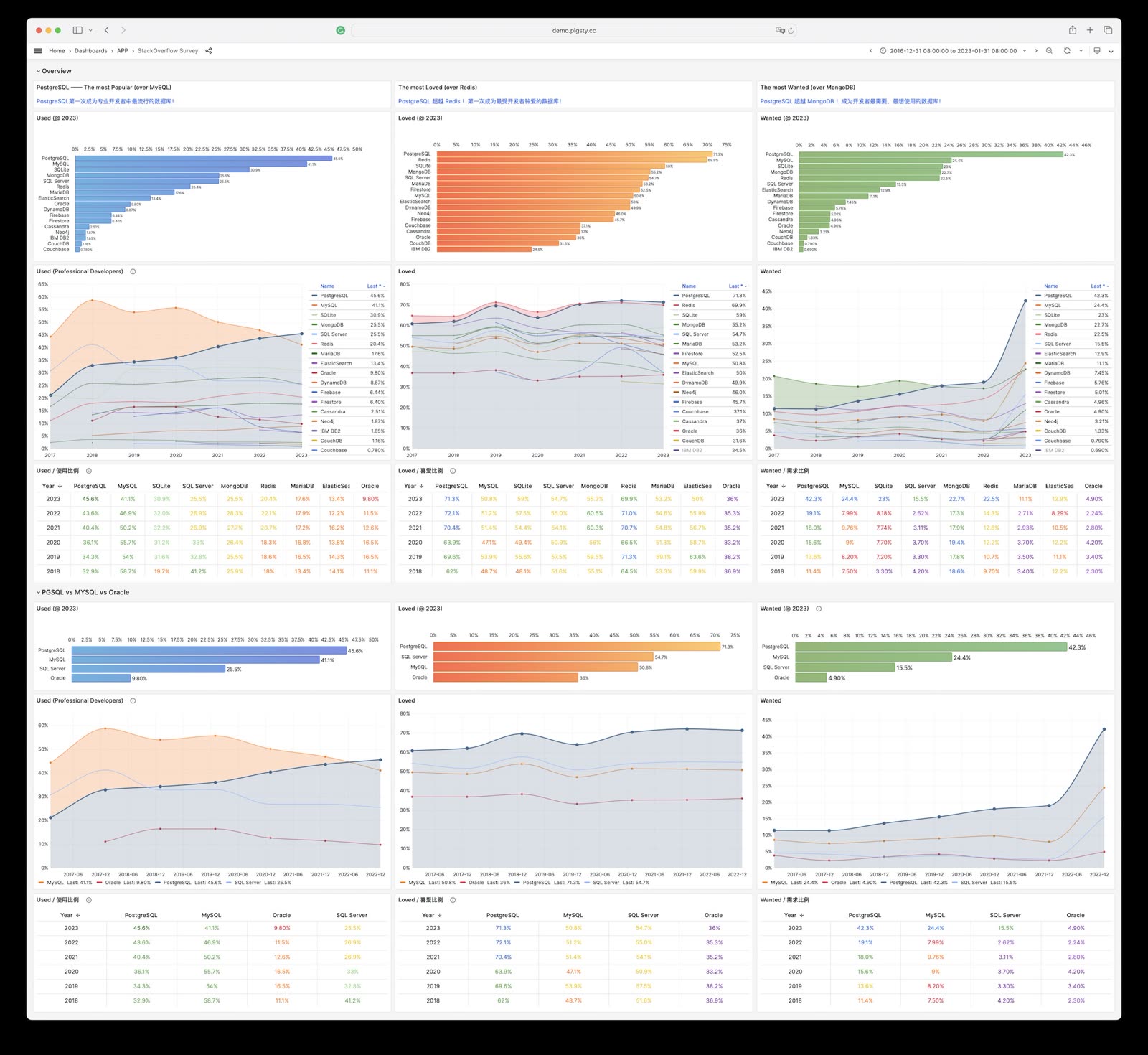

StackOverflow 2024 Survey: PostgreSQL Has Gone Completely Berserk

The 2024 StackOverflow Global Developer Survey results are fresh out, and PostgreSQL has become the most popular, most loved, and most wanted database globally for the second consecutive year. Nothing can stop PostgreSQL from devouring the entire database world anymore! Read more

Self-Hosting Dify with PG, PGVector, and Pigsty

Dify is an open-source LLM app development platform. This article explains how to self-host Dify using Pigsty. Read more

PGCon.Dev 2024, The conf that shutdown PG for a week

Experience & Feeling on the PGCon.Dev 2024 Read more

PostgreSQL 17 Beta1 Released!

The PostgreSQL Global Development Group announces PostgreSQL 17’s first Beta version is now available. This time, PostgreSQL has truly burst the toothpaste tube! Read more

Why PostgreSQL is the Future Standard?

Author: Ajay Kulkarni (TimescaleDB CEO) | Original: Why PostgreSQL Is the Bedrock for the Future of Data

One of the biggest trends in software development today is PostgreSQL becoming the de facto database standard. This article explains why. Read more

Will PostgreSQL Change Its License?

Author: Jonathan Katz (PostgreSQL Core Team) | Original: PostgreSQL Will Not Change Its License

PostgreSQL will not change its license. This article is a response from PostgreSQL core team members on this question. Read more

Postgres is eating the database world

PostgreSQL isn’t just a simple relational database; it’s a data management framework with the potential to engulf the entire database realm. The trend of “Using Postgres for Everything” is no longer limited to a few elite teams but is becoming a mainstream best practice.

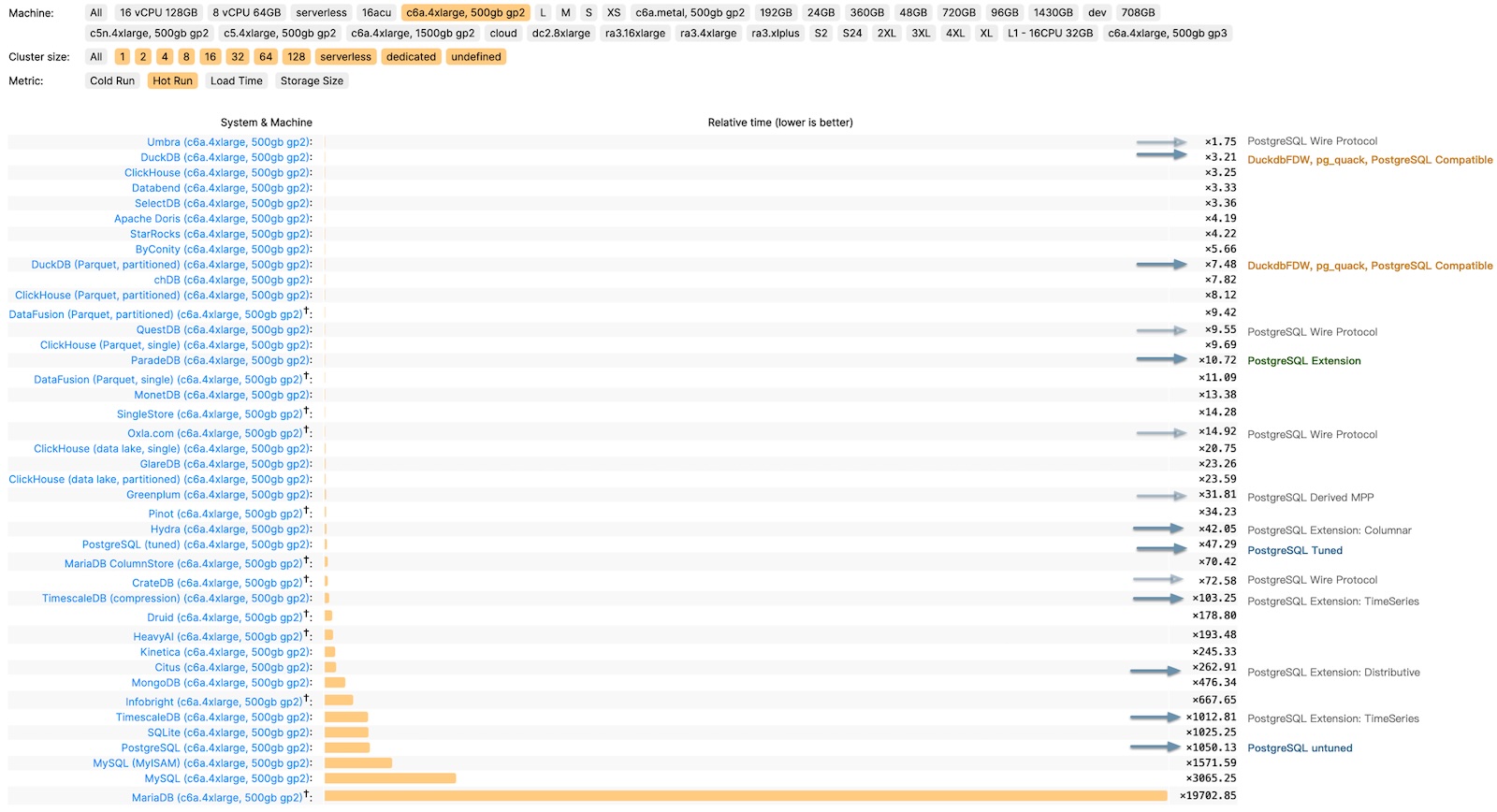

OLAP’s New Challenger

In a 2016 database meetup, I argued that a significant gap in the PostgreSQL ecosystem was the lack of a sufficiently good columnar storage engine for OLAP workloads. While PostgreSQL itself offers lots of analysis features, its performance in full-scale analysis on larger datasets doesn’t quite measure up to dedicated real-time data warehouses.

Consider ClickBench, an analytics performance benchmark, where we’ve documented the performance of PostgreSQL, its ecosystem extensions, and derivative databases. The untuned PostgreSQL performs poorly (x1050), but it can reach (x47) with optimization. Additionally, there are three analysis-related extensions: columnar store Hydra (x42), time-series TimescaleDB (x103), and distributed Citus (x262).

ClickBench c6a.4xlarge, 500gb gp2 results in relative time

This performance can’t be considered bad, especially compared to pure OLTP databases like MySQL and MariaDB (x3065, x19700); however, its third-tier performance is not “good enough,” lagging behind the first-tier OLAP components like Umbra, ClickHouse, Databend, SelectDB (x3~x4) by an order of magnitude. It’s a tough spot - not satisfying enough to use, but too good to discard.

However, the arrival of ParadeDB and DuckDB changed the game!

ParadeDB’s native PG extension pg_analytics achieves second-tier performance (x10), narrowing the gap to the top tier to just 3–4x. Given the additional benefits, this level of performance discrepancy is often acceptable - ACID, freshness and real-time data without ETL, no additional learning curve, no maintenance of separate services, not to mention its ElasticSearch grade full-text search capabilities.

DuckDB focuses on pure OLAP, pushing analysis performance to the extreme (x3.2) — excluding the academically focused, closed-source database Umbra, DuckDB is arguably the fastest for practical OLAP performance. It’s not a PG extension, but PostgreSQL can fully leverage DuckDB’s analysis performance boost as an embedded file database through projects like DuckDB FDW and pg_quack.

The emergence of ParadeDB and DuckDB propels PostgreSQL’s analysis capabilities to the top tier of OLAP, filling the last crucial gap in its analytic performance.

The Pendulum of Database Realm

The distinction between OLTP and OLAP didn’t exist at the inception of databases. The separation of OLAP data warehouses from databases emerged in the 1990s due to traditional OLTP databases struggling to support analytics scenarios’ query patterns and performance demands.

For a long time, best practice in data processing involved using MySQL/PostgreSQL for OLTP workloads and syncing data to specialized OLAP systems like Greenplum, ClickHouse, Doris, Snowflake, etc., through ETL processes.

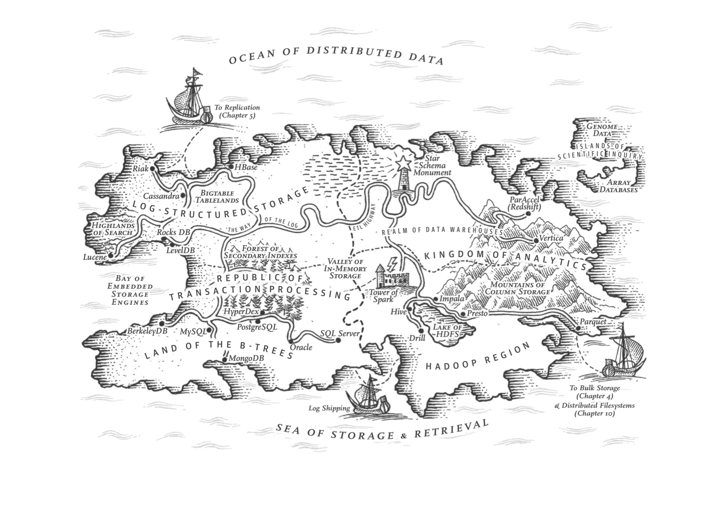

DDIA, Martin Kleppmann, ch3, The republic of OLTP & Kingdom of OLAP

Like many “specialized databases,” the strength of dedicated OLAP systems often lies in performance — achieving 1-3 orders of magnitude improvement over native PG or MySQL. The cost, however, is redundant data, excessive data movement, lack of agreement on data values among distributed components, extra labor expense for specialized skills, extra licensing costs, limited query language power, programmability and extensibility, limited tool integration, poor data integrity and availability compared with a complete DMBS.

However, as the saying goes, “What goes around comes around”. With hardware improving over thirty years following Moore’s Law, performance has increased exponentially while costs have plummeted. In 2024, a single x86 machine can have hundreds of cores (512 vCPU EPYC 9754x2), several TBs of RAM, a single NVMe SSD can hold up to 64TB, and a single all-flash rack can reach 2PB; object storage like S3 offers virtually unlimited storage.

Hardware advancements have solved the data volume and performance issue, while database software developments (PostgreSQL, ParadeDB, DuckDB) have addressed access method challenges. This puts the fundamental assumptions of the analytics sector — the so-called “big data” industry — under scrutiny.

As DuckDB’s manifesto "Big Data is Dead" suggests, the era of big data is over. Most people don’t have that much data, and most data is seldom queried. The frontier of big data recedes as hardware and software evolve, rendering “big data” unnecessary for 99% of scenarios.

If 99% of use cases can now be handled on a single machine with standalone DuckDB or PostgreSQL (and its replicas), what’s the point of using dedicated analytics components? If every smartphone can send and receive texts freely, what’s the point of pagers? (With the caveat that North American hospitals still use pagers, indicating that maybe less than 1% of scenarios might genuinely need “big data.”)

The shift in fundamental assumptions is steering the database world from a phase of diversification back to convergence, from a big bang to a mass extinction. In this process, a new era of unified, multi-modeled, super-converged databases will emerge, reuniting OLTP and OLAP. But who will lead this monumental task of reconsolidating the database field?

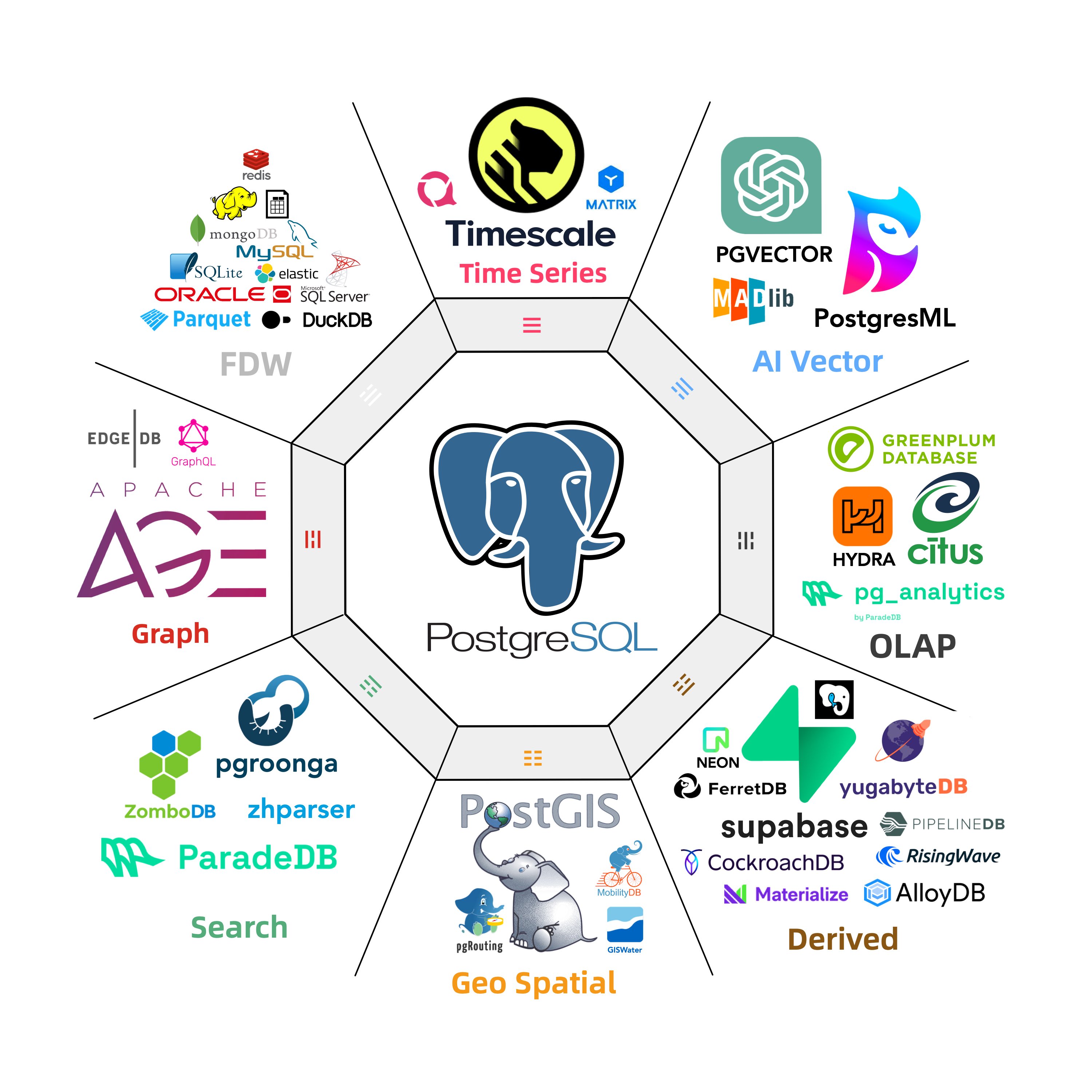

PostgreSQL: The Database World Eater

There are a plethora of niches in the database realm: time-series, geospatial, document, search, graph, vector databases, message queues, and object databases. PostgreSQL makes its presence felt across all these domains.

A case in point is the PostGIS extension, which sets the de facto standard in geospatial databases; the TimescaleDB extension awkwardly positions “generic” time-series databases; and the vector extension, PGVector, turns the dedicated vector database niche into a punchline.

This isn’t the first time; we’re witnessing it again in the oldest and largest subdomain: OLAP analytics. But PostgreSQL’s ambition doesn’t stop at OLAP; it’s eyeing the entire database world!

What makes PostgreSQL so capable? Sure, it’s advanced, but so is Oracle; it’s open-source, as is MySQL. PostgreSQL’s edge comes from being both advanced and open-source, allowing it to compete with Oracle/MySQL. But its true uniqueness lies in its extreme extensibility and thriving extension ecosystem.

TimescaleDB survey: what is the main reason you choose to use PostgreSQL

PostgreSQL isn’t just a relational database; it’s a data management framework capable of engulfing the entire database galaxy. Besides being open-source and advanced, its core competitiveness stems from extensibility, i.e., its infra’s reusability and extension’s composability.

The Magic of Extreme Extensibility

PostgreSQL allows users to develop extensions, leveraging the database’s common infra to deliver features at minimal cost. For instance, the vector database extension pgvector, with just several thousand lines of code, is negligible in complexity compared to PostgreSQL’s millions of lines. Yet, this “insignificant” extension achieves complete vector data types and indexing capabilities, outperforming lots of specialized vector databases.

Why? Because pgvector’s creators didn’t need to worry about the database’s general additional complexities: ACID, recovery, backup & PITR, high availability, access control, monitoring, deployment, 3rd-party ecosystem tools, client drivers, etc., which require millions of lines of code to solve well. They only focused on the essential complexity of their problem.

For example, ElasticSearch was developed on the Lucene search library, while the Rust ecosystem has an improved next-gen full-text search library, Tantivy, as a Lucene alternative. ParadeDB only needs to wrap and connect it to PostgreSQL’s interface to offer search services comparable to ElasticSearch. More importantly, it can stand on the shoulders of PostgreSQL, leveraging the entire PG ecosystem’s united strength (e.g., mixed searches with PG Vector) to “unfairly” compete with another dedicated database.

Pigsty has 440 extensions available. And there are 1000+ more in the ecosystem

The extensibility brings another huge advantage: the composability of extensions, allowing different extensions to work together, creating a synergistic effect where 1+1 » 2. For instance, TimescaleDB can be combined with PostGIS for spatio-temporal data support; the BM25 extension for full-text search can be combined with the PGVector extension, providing hybrid search capabilities.

Furthermore, the distributive extension Citus can transparently transform a standalone cluster into a horizontally partitioned distributed database cluster. This capability can be orthogonally combined with other features, making PostGIS a distributed geospatial database, PGVector a distributed vector database, ParadeDB a distributed full-text search database, and so on.

What’s more powerful is that extensions evolve independently, without the cumbersome need for main branch merges and coordination. This allows for scaling — PG’s extensibility lets numerous teams explore database possibilities in parallel, with all extensions being optional, not affecting the core functionality’s reliability. Those features that are mature and robust have the chance to be stably integrated into the main branch.

PostgreSQL achieves both foundational reliability and agile functionality through the magic of extreme extensibility, making it an outlier in the database world and changing the game rules of the database landscape.

Game Changer in the DB Arena

The emergence of PostgreSQL has shifted the paradigms in the database domain: Teams endeavoring to craft a “new database kernel” now face a formidable trial — how to stand out against the open-source, feature-rich Postgres. What’s their unique value proposition?

Until a revolutionary hardware breakthrough occurs, the advent of practical, new, general-purpose database kernels seems unlikely. No singular database can match the overall prowess of PG, bolstered by all its extensions — not even Oracle, given PG’s ace of being open-source and free.

A niche database product might carve out a space for itself if it can outperform PostgreSQL by an order of magnitude in specific aspects (typically performance). However, it usually doesn’t take long before the PostgreSQL ecosystem spawns open-source extension alternatives. Opting to develop a PG extension rather than a whole new database gives teams a crushing speed advantage in playing catch-up!

Following this logic, the PostgreSQL ecosystem is poised to snowball, accruing advantages and inevitably moving towards a monopoly, mirroring the Linux kernel’s status in server OS within a few years. Developer surveys and database trend reports confirm this trajectory.

PostgreSQL has long been the favorite database in HackerNews & StackOverflow. Many new open-source projects default to PostgreSQL as their primary, if not only, database choice. And many new-gen companies are going All in PostgreSQL.

As “Radical Simplicity: Just Use Postgres” says, Simplifying tech stacks, reducing components, accelerating development, lowering risks, and adding more features can be achieved by “Just Use Postgres.” Postgres can replace many backend technologies, including MySQL, Kafka, RabbitMQ, ElasticSearch, Mongo, and Redis, effortlessly serving millions of users. Just Use Postgres is no longer limited to a few elite teams but becoming a mainstream best practice.

What Else Can Be Done?

The endgame for the database domain seems predictable. But what can we do, and what should we do?

PostgreSQL is already a near-perfect database kernel for the vast majority of scenarios, making the idea of a kernel “bottleneck” absurd. Forks of PostgreSQL and MySQL that tout kernel modifications as selling points are essentially going nowhere.

This is similar to the situation with the Linux OS kernel today; despite the plethora of Linux distros, everyone opts for the same kernel. Forking the Linux kernel is seen as creating unnecessary difficulties, and the industry frowns upon it.

Accordingly, the main conflict is no longer the database kernel itself but two directions— database extensions and services! The former pertains to internal extensibility, while the latter relates to external composability. Much like the OS ecosystem, the competitive landscape will concentrate on database distributions. In the database domain, only those distributions centered around extensions and services stand a chance for ultimate success.

Kernel remains lukewarm, with MariaDB, the fork of MySQL’s parent, nearing delisting, while AWS, profiting from offering services and extensions on top of the free kernel, thrives. Investment has flowed into numerous PG ecosystem extensions and service distributions: Citus, TimescaleDB, Hydra, PostgresML, ParadeDB, FerretDB, StackGres, Aiven, Neon, Supabase, Tembo, PostgresAI, and our own PG distro — — Pigsty.

A dilemma within the PostgreSQL ecosystem is the independent evolution of many extensions and tools, lacking a unifier to synergize them. For instance, Hydra releases its own package and Docker image, and so does PostgresML, each distributing PostgreSQL images with their own extensions and only their own. These images and packages are far from comprehensive database services like AWS RDS.

Even service providers and ecosystem integrators like AWS fall short in front of numerous extensions, unable to include many due to various reasons (AGPLv3 license, security challenges with multi-tenancy), thus failing to leverage the synergistic amplification potential of PostgreSQL ecosystem extensions.

Extesion Category Pigsty RDS & PGDG AWS RDS PG Aliyun RDS PG Add Extension Free to Install Not Allowed Not Allowed Geo Spatial PostGIS 3.4.2 PostGIS 3.4.1 PostGIS 3.3.4 Time Series TimescaleDB 2.14.2 Distributive Citus 12.1 AI / ML PostgresML 2.8.1 Columnar Hydra 1.1.1 Vector PGVector 0.6 PGVector 0.6 pase 0.0.1 Sparse Vector PG Sparse 0.5.6 Full-Text Search pg_bm25 0.5.6 Graph Apache AGE 1.5.0 GraphQL PG GraphQL 1.5.0 Message Queue pgq 3.5.0 OLAP pg_analytics 0.5.6 DuckDB duckdb_fdw 1.1 CDC wal2json 2.5.3 wal2json 2.5 Bloat Control pg_repack 1.5.0 pg_repack 1.5.0 pg_repack 1.4.8 Point Cloud PG PointCloud 1.2.5 Ganos PointCloud 6.1 Many important extensions are not available on Cloud RDS (PG 16, 2024-02-29)

Extensions are the soul of PostgreSQL. A Postgres without the freedom to use extensions is like cooking without salt, a giant constrained.

Addressing this issue is one of our primary goals.

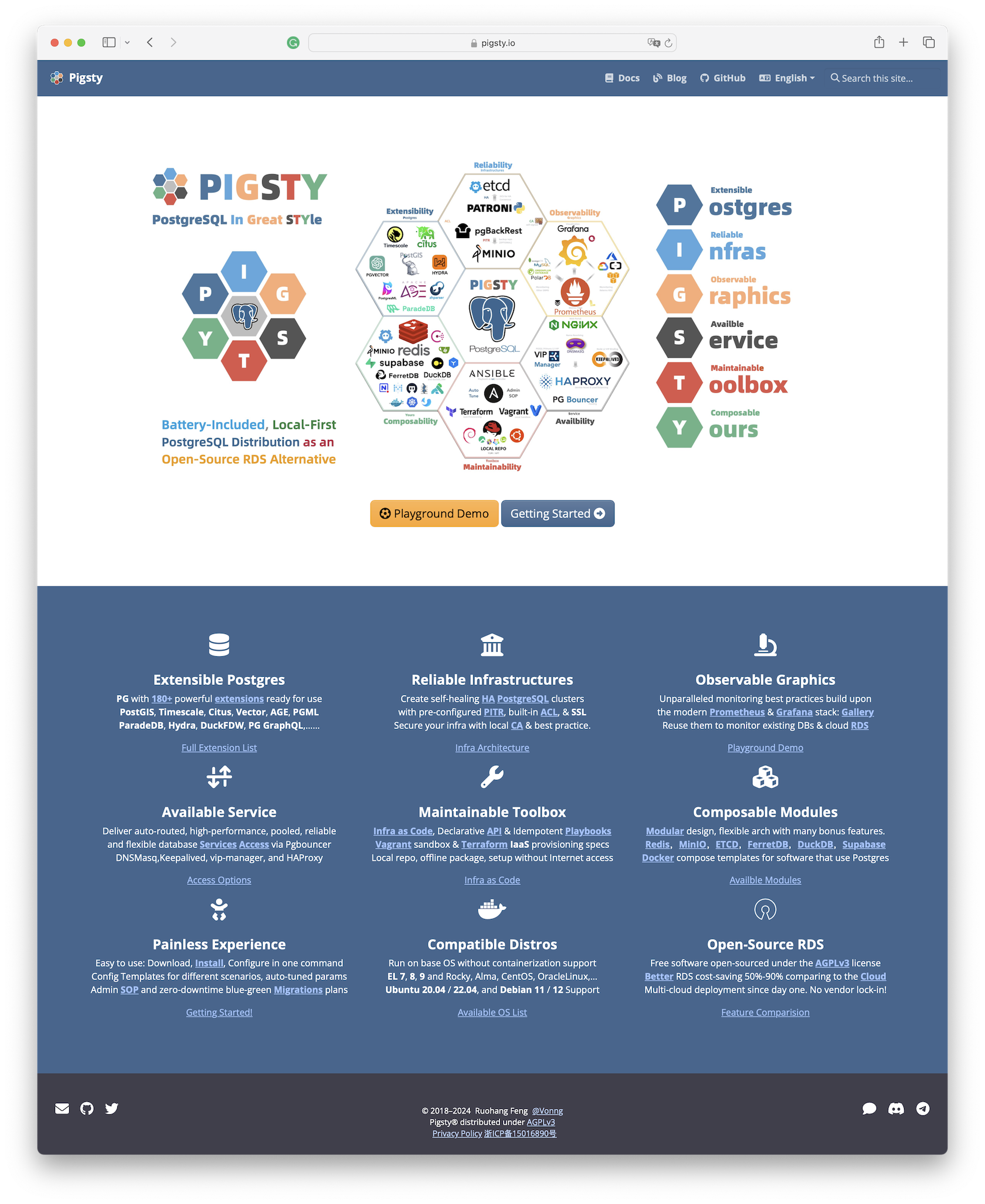

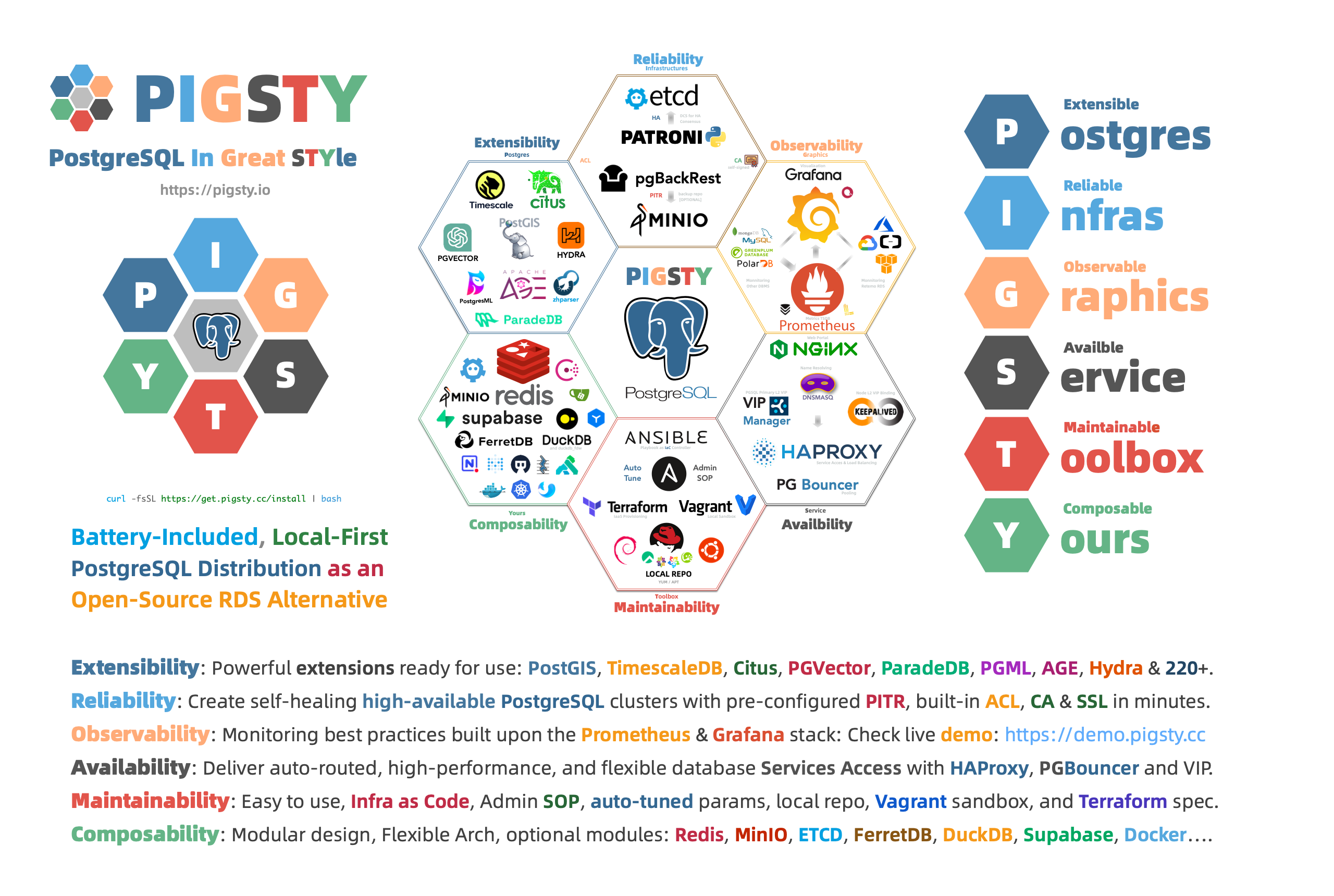

Our Resolution: Pigsty

Despite earlier exposure to MySQL Oracle, and MSSQL, when I first used PostgreSQL in 2015, I was convinced of its future dominance in the database realm. Nearly a decade later, I’ve transitioned from a user and administrator to a contributor and developer, witnessing PG’s march toward that goal.

Interactions with diverse users revealed that the database field’s shortcoming isn’t the kernel anymore — PostgreSQL is already sufficient. The real issue is leveraging the kernel’s capabilities, which is the reason behind RDS’s booming success.

However, I believe this capability should be as accessible as free software, like the PostgreSQL kernel itself — available to every user, not just renting from cyber feudal lords.

Thus, I created Pigsty, a battery-included, local-first PostgreSQL distribution as an open-source RDS Alternative, which aims to harness the collective power of PostgreSQL ecosystem extensions and democratize access to production-grade database services.

Pigsty stands for PostgreSQL in Great STYle, representing the zenith of PostgreSQL.

We’ve defined six core propositions addressing the central issues in PostgreSQL database services:

Extensible Postgres, Reliable Infras, Observable Graphics, Available Services, Maintainable Toolbox, and Composable Modules.

The initials of these value propositions offer another acronym for Pigsty:

Postgres, Infras, Graphics, Service, Toolbox, Yours.

Your graphical Postgres infrastructure service toolbox.

Extensible PostgreSQL is the linchpin of this distribution. In the recently launched Pigsty v2.6, we integrated DuckDB FDW and ParadeDB extensions, massively boosting PostgreSQL’s analytical capabilities and ensuring every user can easily harness this power.

Our aim is to integrate the strengths within the PostgreSQL ecosystem, creating a synergistic force akin to the Ubuntu of the database world. I believe the kernel debate is settled, and the real competitive frontier lies here.

- PostGIS: Provides geospatial data types and indexes, the de facto standard for GIS (& pgPointCloud, pgRouting).

- TimescaleDB: Adds time-series, continuous aggregates, distributed, columnar storage, and automatic compression capabilities.

- PGVector: Support AI vectors/embeddings and ivfflat, hnsw vector indexes (& pg_sparse for sparse vectors).

- Citus: Transforms classic master-slave PG clusters into horizontally partitioned distributed database clusters.

- Hydra: Adds columnar storage and analytics, rivaling ClickHouse’s analytic capabilities.

- ParadeDB: Elevates full-text search and mixed retrieval to ElasticSearch levels (& zhparser for Chinese tokenization).

- Apache AGE: Graph database extension, adding Neo4J-like OpenCypher query support to PostgreSQL.

- PG GraphQL: Adds native built-in GraphQL query language support to PostgreSQL.

- DuckDB FDW: Enables direct access to DuckDB’s powerful embedded analytic database files through PostgreSQL (& DuckDB CLI).

- Supabase: An open-source Firebase alternative based on PostgreSQL, providing a complete app development storage solution.

- FerretDB: An open-source MongoDB alternative based on PostgreSQL, compatible with MongoDB APIs/drivers.

- PostgresML: Facilitates classic machine learning algorithms, calling, deploying, and training AI models with SQL.

Developers, your choices will shape the future of the database world. I hope my work helps you better utilize the world’s most advanced open-source database kernel: PostgreSQL.

Read in Pigsty’s Blog | GitHub Repo: Pigsty | Official Website

Technical Minimalism: Just Use PostgreSQL for Everything

Whether production databases should be containerized remains a controversial topic. From a DBA’s perspective, I believe that currently, putting production databases in Docker is still a bad idea. Read more

New PostgreSQL Ecosystem Player: ParadeDB

ParadeDB aims to be an Elasticsearch alternative: “Modern Elasticsearch Alternative built on Postgres” — PostgreSQL for search and analytics. Read more

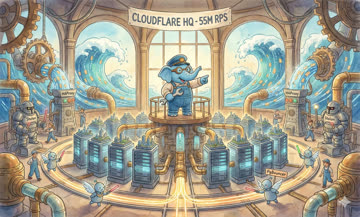

PostgreSQL's Impressive Scalability

This article describes how Cloudflare scaled to support 55 million requests per second using 15 PostgreSQL clusters, and PostgreSQL’s scalability performance. Read more

PostgreSQL Outlook for 2024

Author: Jonathan Katz (PostgreSQL Core Team) | Original: PostgreSQL 2024

PostgreSQL core team member Jonathan Katz’s outlook for PostgreSQL in 2024, reviewing the progress made over the past few years. Read more

PostgreSQL Wins 2024 Database of the Year Award! (Fifth Time)

DB-Engines officially announced today that PostgreSQL has once again been crowned “Database of the Year.” This is the fifth time PG has received this honor in the past seven years. If not for Snowflake stealing the spotlight for two years, the database world would have almost become a PostgreSQL solo show. Read more

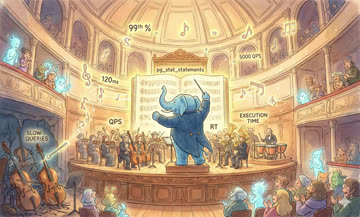

PostgreSQL Macro Query Optimization with pg_stat_statements

pg_stat_statements for macro-level PostgreSQL query optimization.Query optimization is one of the core responsibilities of DBAs. This article introduces how to use metrics provided by pg_stat_statements for macro-level PostgreSQL query optimization. Read more

FerretDB: PostgreSQL Disguised as MongoDB

FerretDB aims to provide a truly open-source MongoDB alternative based on PostgreSQL. Read more

How to Use pg_filedump for Data Recovery?

pg_filedump can help you!Backups are a DBA’s lifeline — but what if your PostgreSQL database has already exploded and you have no backups? Maybe pg_filedump can help you! Read more

Vector is the New JSON

Author: Jonathan Katz (PostgreSQL Core Team) | Original: Vectors are the new JSON in PostgreSQL

Vectors will become a key element in building applications, just like JSON historically. PostgreSQL leads the AI era with vector extensions. Read more

PostgreSQL, The most successful database

StackOverflow 2023 Survey shows PostgreSQL is the most popular, loved, and wanted database, solidifying its status as the ‘Linux of Database’. Read more

AI Large Models and Vector Database PGVector

This article focuses on vector databases hyped by AI, introduces the basic principles of AI embeddings and vector storage/retrieval, and demonstrates the functionality, performance, acquisition, and application of the vector database extension PGVECTOR through a concrete knowledge base retrieval case study. Read more

How Powerful is PostgreSQL Really?

Let performance data speak: Why PostgreSQL is the world’s most advanced open-source relational database, aka the world’s most successful database. MySQL vs PostgreSQL performance showdown and distributed database reality check. Read more

Why PostgreSQL is the Most Successful Database?

Database users are developers, but what about developers’ preferences, likes, and choices? Looking at StackOverflow survey results over the past six years, it’s clear that in 2022, PostgreSQL has won all three categories, becoming literally the “most successful database” Read more

Ready-to-Use PostgreSQL Distribution: Pigsty

Yesterday I gave a live presentation in the PostgreSQL Chinese community, introducing the open-source PostgreSQL full-stack solution — Pigsty Read more

Why Does PostgreSQL Have a Bright Future?

Databases are the core component of information systems, relational databases are the absolute backbone of databases, and PostgreSQL is the world’s most advanced open source relational database. With such favorable timing and positioning, how can it not achieve great success? Read more

Implementing Advanced Fuzzy Search

How to implement relatively complex fuzzy search logic in PostgreSQL? Read more

Localization and Collation Rules in PostgreSQL

What? Don’t know what COLLATION is? Remember one thing: using C COLLATE is always the right choice! Read more

PG Replica Identity Explained

Replica identity is important - it determines the success or failure of logical replication Read more

PostgreSQL Logical Replication Deep Dive

This article introduces the principles and best practices of logical replication in PostgreSQL 13. Read more

A Methodology for Diagnosing PostgreSQL Slow Queries

Slow queries are the sworn enemy of OLTP databases. Here’s how to identify, analyze, and fix them using metrics (Pigsty dashboards), pg_stat_statements, and logs. Read more

Incident-Report: Patroni Failure Due to Time Travel

Machine restarted due to failure, NTP service corrected PG time after PG startup, causing Patroni to fail to start. Read more

Online Primary Key Column Type Change

How to change column types online, such as upgrading from INT to BIGINT? Read more

Golden Monitoring Metrics: Errors, Latency, Throughput, Saturation

Understanding the golden monitoring metrics in PostgreSQL Read more

Database Cluster Management Concepts and Entity Naming Conventions

Concepts and their naming are very important. Naming style reflects an engineer’s understanding of system architecture. Poorly defined concepts lead to communication confusion, while carelessly set names create unexpected additional burden. Therefore, they need careful design. Read more

PostgreSQL's KPI

Managing databases is similar to managing people - both need KPIs (Key Performance Indicators). So what are database KPIs? This article introduces a way to measure PostgreSQL load: using a single horizontally comparable metric that is basically independent of workload type and machine type, called PG Load. Read more

Online PostgreSQL Column Type Migration

How to modify PostgreSQL column types online? A general approach Read more

Frontend-Backend Communication Wire Protocol

Understanding the TCP protocol used for communication between PostgreSQL server and client, and printing messages using Go Read more

Transaction Isolation Level Considerations

PostgreSQL actually has only two transaction isolation levels: Read Committed and Serializable Read more

Incident: PostgreSQL Extension Installation Causes Connection Failure

Today encountered an interesting case where a customer reported database connection issues caused by extensions. Read more

CDC Change Data Capture Mechanisms

Change Data Capture is an interesting ETL alternative solution. Read more

Locks in PostgreSQL

pg_locks.Snapshot isolation does most of the heavy lifting in PG, but locks still matter. Here’s a practical guide to table locks, row locks, intention locks, and pg_locks. Read more

O(n2) Complexity of GIN Search

When GIN indexes are used to search with very long keyword lists, performance degrades significantly. This article explains why GIN index keyword search has O(n^2) time complexity. Read more

PostgreSQL Common Replication Topology Plans

Replication is one of the core issues in system architecture. Read more

Warm Standby: Using pg_receivewal

There are various backup strategies. Physical backups can usually be divided into four types. Read more

Incident-Report: Connection-Pool Contamination Caused by pg_dump

Sometimes, interactions between components manifest in subtle ways. For example, using pg_dump to export data from a connection pool can cause connection pool contamination issues. Read more

PostgreSQL Data Page Corruption Repair

Using binary editing to repair PostgreSQL data pages, and how to make a primary key query return two records. Read more

Relation Bloat Monitoring and Management

PostgreSQL uses MVCC as its primary concurrency control technology. While it has many benefits, it also brings other effects, such as relation bloat. Read more

Getting Started with PipelineDB

PipelineDB is a PostgreSQL extension for streaming analytics. Here’s how to install it and build continuous views over live data. Read more

TimescaleDB Quick Start

TimescaleDB is a PostgreSQL extension plugin that provides time-series database functionality. Read more

Incident-Report: Integer Overflow from Rapid Sequence Number Consumption

If you use Integer sequences on tables, you should consider potential overflow scenarios. Read more

Incident-Report: PostgreSQL Transaction ID Wraparound

XID WrapAround is perhaps a unique type of failure specific to PostgreSQL Read more

GeoIP Geographic Reverse Lookup Optimization

A common requirement in application development is GeoIP conversion - converting source IP addresses to geographic coordinates or administrative divisions (country-state-city-county-town-village) Read more

PostgreSQL Trigger Usage Considerations

Detailed understanding of trigger management and usage in PostgreSQL Read more

PostgreSQL Development Convention (2018 Edition)

Without rules, there can be no order. This article compiles a development specification for PostgreSQL database principles and features, which can reduce confusion encountered when using PostgreSQL. Read more

What Are PostgreSQL's Advantages?

PostgreSQL’s slogan is “The World’s Most Advanced Open-Source Relational Database,” but I think the most vivid characterization should be: The Full-Stack Database That Does It All - one tool to rule them all. Read more

Efficient Administrative Region Lookup with PostGIS

How to efficiently solve the typical reverse geocoding problem: determining administrative regions based on user coordinates. Read more

KNN Ultimate Optimization: From RDS to PostGIS

Ultimate optimization of KNN problems, from traditional relational design to PostGIS Read more

Monitoring Table Size in PostgreSQL

Tables in PostgreSQL correspond to many physical files. This article explains how to calculate the actual size of a table in PostgreSQL. Read more

PgAdmin Installation and Configuration

PgAdmin is a GUI program for managing PostgreSQL, written in Python, but it’s quite dated and requires some additional configuration. Read more

Incident-Report: Uneven Load Avalanche

Recently there was a perplexing incident where a database had half its data volume and load migrated away, but ended up being overwhelmed due to increased load. Read more

Bash and psql Tips

Some tips for interacting between PostgreSQL and Bash. Read more

Distinct On: Remove Duplicate Data

Use Distinct On extension clause to quickly find records with maximum/minimum values within groups Read more

Function Volatility Classification Levels

PostgreSQL functions have three volatility levels by default. Proper use can significantly improve performance. Read more

Implementing Mutual Exclusion Constraints with Exclude

Exclude constraint is a PostgreSQL extension that can implement more advanced and sophisticated database constraints. Read more

PostgreSQL Routine Maintenance

Cars need oil changes, databases need maintenance. For PG, three important maintenance tasks: backup, repack, vacuum Read more

Backup and Recovery Methods Overview

Backup is the foundation of a DBA’s livelihood. With backups, there’s no need to panic. Read more

PgBackRest2 Documentation

PgBackRest is a set of PostgreSQL backup tools written in Perl Read more

Pgbouncer Quick Start

Pgbouncer is a lightweight database connection pool. This guide covers basic Pgbouncer configuration, management, and usage. Read more

PostgreSQL Server Log Regular Configuration

It’s recommended to configure PostgreSQL’s log format as CSV for easy analysis, and it can be directly imported into PostgreSQL data tables. Read more

Testing Disk Performance with FIO

FIO is a convenient tool for testing disk I/O performance Read more

Using sysbench to Test PostgreSQL Performance

Although PostgreSQL provides pgbench, sometimes you need sysbench to outperform MySQL. Read more

Changing Engines Mid-Flight — PostgreSQL Zero-Downtime Data Migration

Data migration typically involves stopping services for updates. Zero-downtime data migration is a relatively advanced operation. Read more

Finding Unused Indexes

Indexes are useful, but they’re not free. Unused indexes are a waste. Use these methods to identify unused indexes. Read more

Batch Configure SSH Passwordless Login

Quick configuration for passwordless login to all machines Read more

Wireshark Packet Capture Protocol Analysis

Wireshark is a very useful tool, especially suitable for analyzing network protocols. Here’s a simple introduction to using Wireshark for packet capture and PostgreSQL protocol analysis. Read more

The Versatile file_fdw — Reading System Information from Your Database

file_fdw, you can easily view operating system information, fetch network data, and feed various data sources into your database for unified viewing and management.With file_fdw, you can easily view operating system information, fetch network data, and feed various data sources into your database for unified viewing and management. Read more

Common Linux Statistics CLI Tools

top, free, vmstat, iostat: Quick reference for four commonly used CLI tools Read more

Installing PostGIS from Source

PostGIS is PostgreSQL’s killer extension, but compiling and installing it isn’t easy. Read more

Go Database Tutorial: database/sql

Similar to JDBC, Go also has a standard database access interface. This article details how to use database/sql in Go and important considerations. Read more

Implementing Cache Synchronization with Go and PostgreSQL

Cleverly utilizing PostgreSQL’s Notify feature, you can conveniently notify applications of metadata changes and implement trigger-based logical replication. Read more

Auditing Data Changes with Triggers

Sometimes we want to record important metadata changes for audit purposes. PostgreSQL triggers can conveniently solve this need automatically. Read more

Building an ItemCF Recommender in Pure SQL

Five minutes, PostgreSQL, and the MovieLens dataset—that’s all you need to implement a classic item-based collaborative filtering recommender. Read more

UUID Properties, Principles and Applications

UUID properties, principles and applications, and how to manipulate UUIDs using PostgreSQL stored procedures. Read more

PostgreSQL MongoFDW Installation and Deployment

Recently had business requirements to access MongoDB through PostgreSQL FDW, but compiling MongoDB FDW is really a nightmare. Read more