Citus (Distributive)


Note that Citus support for the latest PostgreSQL major version (17) is still a work in progress.

Pigsty has native Citus support.

Install

Citus is a standard PostgreSQL extension, which can be installed and enabled on a native PostgreSQL cluster by following the standard plugin installation process.

To install it manually, you can run the following command:

./pgsql.yml -t pg_extension -e '{"pg_extensions":["citus"]}'
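After installation, it can be useful to confirm that the extension has actually been created in the target database. The following is a minimal sketch, not part of Pigsty: the helper name `citus_version`, the `PSQL` override variable, and the host/database arguments are all illustrative assumptions. It only reads the standard `pg_extension` catalog.

```shell
#!/usr/bin/env bash
# PSQL may be overridden (e.g. PSQL=echo) to preview the command without connecting.
PSQL="${PSQL:-psql}"

# citus_version HOST DB
# Hypothetical helper: print the version of the citus extension created in DB.
# An empty result means the extension has not been created in that database.
citus_version() {
  local host="$1" db="$2"
  "$PSQL" -h "$host" -d "$db" -Atc \
    "SELECT extversion FROM pg_extension WHERE extname = 'citus'"
}
```

For example, `citus_version pg-citus0 test` would query the `test` database on `pg-citus0`, assuming those names match your own inventory.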

Configuration

To define a citus cluster, you have to specify the following parameters:

- pg_mode has to be set to citus instead of the default pgsql

- pg_shard & pg_group have to be defined on each sharding cluster

- pg_primary_db has to be defined to specify the database to be managed

- pg_dbsu_password has to be set to a non-empty plain-text password if you want to use the pg_dbsu postgres rather than the default pg_admin_username to perform admin commands

In addition, extra HBA rules that allow SSL access from localhost and from the other data nodes are required, like the pg_hba_rules entries in the examples below.

You can define each Citus cluster separately within a group, as in conf/dbms/citus.yml:

all:
  children:
    pg-citus0: # citus data node 0
      hosts: { 10.10.10.10: { pg_seq: 1, pg_role: primary } }
      vars: { pg_cluster: pg-citus0 , pg_group: 0 }
    pg-citus1: # citus data node 1
      hosts: { 10.10.10.11: { pg_seq: 1, pg_role: primary } }
      vars: { pg_cluster: pg-citus1 , pg_group: 1 }
    pg-citus2: # citus data node 2
      hosts: { 10.10.10.12: { pg_seq: 1, pg_role: primary } }
      vars: { pg_cluster: pg-citus2 , pg_group: 2 }
    pg-citus3: # citus data node 3, with an extra replica
      hosts:
        10.10.10.13: { pg_seq: 1, pg_role: primary }
        10.10.10.14: { pg_seq: 2, pg_role: replica }
      vars: { pg_cluster: pg-citus3 , pg_group: 3 }
  vars: # global parameters for all citus clusters
    pg_mode: citus # pgsql cluster mode: citus
    pg_shard: pg-citus # citus shard name: pg-citus
    pg_primary_db: meta # citus distributed database name
    pg_dbsu_password: DBUser.Postgres # all dbsu password access for citus cluster
    pg_users: [ { name: dbuser_meta ,password: DBUser.Meta ,pgbouncer: true ,roles: [ dbrole_admin ] } ]
    pg_databases: [ { name: meta ,extensions: [ { name: citus }, { name: postgis }, { name: timescaledb } ] } ]
    pg_hba_rules:
      - { user: 'all' ,db: all ,addr: 127.0.0.1/32 ,auth: ssl ,title: 'all user ssl access from localhost' }
      - { user: 'all' ,db: all ,addr: intra ,auth: ssl ,title: 'all user ssl access from intranet' }

You can also specify all Citus cluster members within a single group; take prod.yml for example.

#==========================================================#

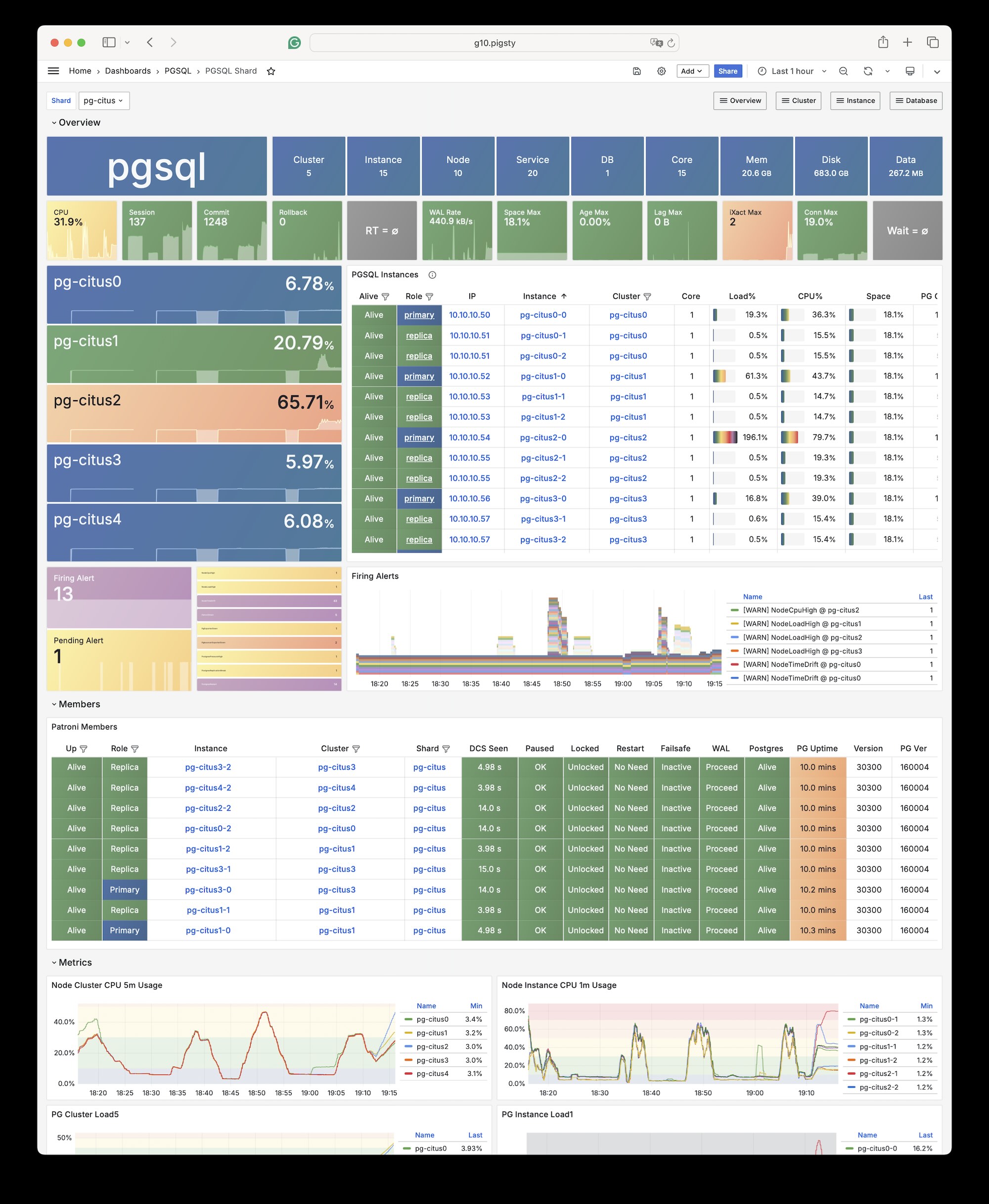

# pg-citus: 10 node citus cluster (5 x primary-replica pair)

#==========================================================#

pg-citus: # citus group

hosts:

10.10.10.50: { pg_group: 0, pg_cluster: pg-citus0 ,pg_vip_address: 10.10.10.60/24 ,pg_seq: 0, pg_role: primary }

10.10.10.51: { pg_group: 0, pg_cluster: pg-citus0 ,pg_vip_address: 10.10.10.60/24 ,pg_seq: 1, pg_role: replica }

10.10.10.52: { pg_group: 1, pg_cluster: pg-citus1 ,pg_vip_address: 10.10.10.61/24 ,pg_seq: 0, pg_role: primary }

10.10.10.53: { pg_group: 1, pg_cluster: pg-citus1 ,pg_vip_address: 10.10.10.61/24 ,pg_seq: 1, pg_role: replica }

10.10.10.54: { pg_group: 2, pg_cluster: pg-citus2 ,pg_vip_address: 10.10.10.62/24 ,pg_seq: 0, pg_role: primary }

10.10.10.55: { pg_group: 2, pg_cluster: pg-citus2 ,pg_vip_address: 10.10.10.62/24 ,pg_seq: 1, pg_role: replica }

10.10.10.56: { pg_group: 3, pg_cluster: pg-citus3 ,pg_vip_address: 10.10.10.63/24 ,pg_seq: 0, pg_role: primary }

10.10.10.57: { pg_group: 3, pg_cluster: pg-citus3 ,pg_vip_address: 10.10.10.63/24 ,pg_seq: 1, pg_role: replica }

10.10.10.58: { pg_group: 4, pg_cluster: pg-citus4 ,pg_vip_address: 10.10.10.64/24 ,pg_seq: 0, pg_role: primary }

10.10.10.59: { pg_group: 4, pg_cluster: pg-citus4 ,pg_vip_address: 10.10.10.64/24 ,pg_seq: 1, pg_role: replica }

vars:

pg_mode: citus # pgsql cluster mode: citus

pg_shard: pg-citus # citus shard name: pg-citus

pg_primary_db: test # primary database used by citus

pg_dbsu_password: DBUser.Postgres # all dbsu password access for citus cluster

pg_vip_enabled: true

pg_vip_interface: eth1

pg_extensions: [ 'citus postgis timescaledb pgvector' ]

pg_libs: 'citus, timescaledb, pg_stat_statements, auto_explain' # citus will be added by patroni automatically

pg_users: [ { name: test ,password: test ,pgbouncer: true ,roles: [ dbrole_admin ] } ]

pg_databases: [ { name: test ,owner: test ,extensions: [ { name: citus }, { name: postgis } ] } ]

pg_hba_rules:

- { user: 'all' ,db: all ,addr: 10.10.10.0/24 ,auth: trust ,title: 'trust citus cluster members' }

- { user: 'all' ,db: all ,addr: 127.0.0.1/32 ,auth: ssl ,title: 'all user ssl access from localhost' }

- { user: 'all' ,db: all ,addr: intra ,auth: ssl ,title: 'all user ssl access from intranet' }

You can then create distributed tables and reference tables on the coordinator node. Since Citus 11.2, any data node can be used as the coordinator node.

Usage

You can access any (primary) node in the cluster as you would with a regular cluster:

pgbench -i postgres://test:test@pg-citus0/test

pgbench -nv -P1 -T1000 -c 2 postgres://test:test@pg-citus0/test

By default, any changes you make to a shard only occur on that cluster, not on other shards.

If you want to distribute the tables, you can use the following commands:

psql -h pg-citus0 -d test -c "SELECT create_distributed_table('pgbench_accounts', 'aid'); SELECT truncate_local_data_after_distributing_table('public.pgbench_accounts');"

psql -h pg-citus0 -d test -c "SELECT create_reference_table('pgbench_branches') ; SELECT truncate_local_data_after_distributing_table('public.pgbench_branches');"

psql -h pg-citus0 -d test -c "SELECT create_reference_table('pgbench_history') ; SELECT truncate_local_data_after_distributing_table('public.pgbench_history');"

psql -h pg-citus0 -d test -c "SELECT create_reference_table('pgbench_tellers') ; SELECT truncate_local_data_after_distributing_table('public.pgbench_tellers');"

There are two types of tables you can create:

- distributed tables (automatic partitioning, need to specify partition key)

- reference tables (full replication: no need to specify partition key)
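To see which of the two types each table ended up as, you can query the `citus_tables` view that Citus provides. The sketch below is illustrative only: the helper name `list_citus_tables` and the `PSQL` override variable are assumptions, and the host and database should be replaced with your own.

```shell
#!/usr/bin/env bash
# PSQL may be overridden (e.g. PSQL=echo) to preview the command without connecting.
PSQL="${PSQL:-psql}"

# list_citus_tables HOST DB
# Hypothetical helper: show each Citus-managed table with its type
# (distributed or reference), its distribution column, and its shard count.
list_citus_tables() {
  local host="$1" db="$2"
  "$PSQL" -h "$host" -d "$db" -c \
    "SELECT table_name, citus_table_type, distribution_column, shard_count FROM citus_tables ORDER BY table_name"
}
```

With the pgbench tables from this page, `list_citus_tables pg-citus0 test` should report pgbench_accounts as distributed on `aid` and the other three tables as reference tables.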

Starting from Citus 11.2, any Citus database node can act as a coordinator, meaning any primary node can write.

For example, your changes will be visible on other nodes:

psql -h pg-citus1 -d test -c '\dt+'

And your scan will be distributed:

vagrant@meta-1:~$ psql -h pg-citus3 -d test -c 'explain select * from pgbench_accounts'
                                               QUERY PLAN
---------------------------------------------------------------------------------------------------------
 Custom Scan (Citus Adaptive)  (cost=0.00..0.00 rows=100000 width=352)
   Task Count: 32
   Tasks Shown: One of 32
   ->  Task
         Node: host=10.10.10.52 port=5432 dbname=test
         ->  Seq Scan on pgbench_accounts_102008 pgbench_accounts  (cost=0.00..81.66 rows=3066 width=97)
(6 rows)
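The plan above reports 32 tasks, one per shard. To check how those shards are spread across the data nodes, one option is to aggregate the `citus_shards` view by node. This is a sketch under assumptions: `shards_per_node` and the `PSQL` variable are hypothetical names, and the table name is interpolated directly into the SQL, so it should only be used with trusted input.

```shell
#!/usr/bin/env bash
# PSQL may be overridden (e.g. PSQL=echo) to preview the command without connecting.
PSQL="${PSQL:-psql}"

# shards_per_node HOST DB TABLE
# Hypothetical helper: count the shards of TABLE placed on each node.
# TABLE is pasted into the query as-is; pass trusted names only.
shards_per_node() {
  local host="$1" db="$2" table="$3"
  "$PSQL" -h "$host" -d "$db" -c \
    "SELECT nodename, count(*) AS shards FROM citus_shards WHERE table_name = '${table}'::regclass GROUP BY nodename ORDER BY nodename"
}
```

For instance, `shards_per_node pg-citus3 test pgbench_accounts` would list each node holding shards of pgbench_accounts together with its shard count.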

You can issue writes from different primary nodes:

pgbench -nv -P1 -T1000 -c 2 postgres://test:test@pg-citus1/test

pgbench -nv -P1 -T1000 -c 2 postgres://test:test@pg-citus2/test

pgbench -nv -P1 -T1000 -c 2 postgres://test:test@pg-citus3/test

pgbench -nv -P1 -T1000 -c 2 postgres://test:test@pg-citus4/test
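To exercise several primaries at the same time rather than one after another, the commands above can be started as background jobs. The following sketch assumes the `test` user, password, and database from the example configuration; `bench_all` and the `PGBENCH` override variable are hypothetical names introduced here.

```shell
#!/usr/bin/env bash
# PGBENCH may be overridden (e.g. PGBENCH=echo) to preview the commands.
PGBENCH="${PGBENCH:-pgbench}"

# bench_all DURATION NODE...
# Hypothetical helper: run pgbench against every listed node in parallel
# for DURATION seconds, then wait for all runs to finish.
bench_all() {
  local duration="$1"; shift
  local node
  for node in "$@"; do
    # 2 clients, no vacuum, progress report every second
    "$PGBENCH" -c 2 -n -P 1 -T "$duration" "postgres://test:test@${node}/test" &
  done
  wait  # block until every background pgbench has exited
}
```

A call such as `bench_all 60 pg-citus1 pg-citus2 pg-citus3 pg-citus4` would then write through four primaries concurrently for one minute.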

If a primary node fails, its replica takes over through native Patroni support:

test=# select * from pg_dist_node;
 nodeid | groupid |  nodename   | nodeport | noderack | hasmetadata | isactive | noderole | nodecluster | metadatasynced | shouldhaveshards
--------+---------+-------------+----------+----------+-------------+----------+----------+-------------+----------------+------------------
      1 |       0 | 10.10.10.51 |     5432 | default  | t           | t        | primary  | default     | t              | f
      2 |       2 | 10.10.10.54 |     5432 | default  | t           | t        | primary  | default     | t              | t
      5 |       1 | 10.10.10.52 |     5432 | default  | t           | t        | primary  | default     | t              | t
      3 |       4 | 10.10.10.58 |     5432 | default  | t           | t        | primary  | default     | t              | t
      4 |       3 | 10.10.10.56 |     5432 | default  | t           | t        | primary  | default     | t              | t
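In the output above, group 0 is served by 10.10.10.51, which the prod.yml example defines as the replica of pg-citus0, so a failover has already happened there. To watch for such changes, a short query over the same `pg_dist_node` columns is enough. This is a minimal sketch; `active_primaries` and the `PSQL` variable are hypothetical names.

```shell
#!/usr/bin/env bash
# PSQL may be overridden (e.g. PSQL=echo) to preview the command without connecting.
PSQL="${PSQL:-psql}"

# active_primaries HOST DB
# Hypothetical helper: print "groupid|nodename" for each active primary
# registered in the Citus metadata, ordered by group.
active_primaries() {
  local host="$1" db="$2"
  "$PSQL" -h "$host" -d "$db" -Atc \
    "SELECT groupid, nodename FROM pg_dist_node WHERE noderole = 'primary' AND isactive ORDER BY groupid"
}
```

Running `active_primaries pg-citus1 test` before and after a failover and comparing the two outputs shows which group changed its primary address.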
